A crash course on caching: Caching challenges in distributed systems (Part 3/3)

Strategies for addressing caching issues like Thundering Herd, Cache Stampede and Hot Key issues in large-scale systems

This is the final part of the three-part series on Caching. Today, we will focus on common caching challenges and how to mitigate them.

Before we begin, here’s a quick recap of what we covered in the previous parts:

Caching Part 1: What is caching, How it happens at the hardware level, Where should caching be implemented?

Caching Part 2: Distributed Caches, Types of Caching Strategies, Types of Cache Eviction Strategies, Comparison between popular Distributed caches

If you are a recent subscriber, here’s what you might have missed:

Thundering Herd Problem in Distributed Caching

Understanding the Thundering Herd problem

In a high-traffic environment, it’s common for one or more resources—like databases, cache nodes, or application servers—to become overwhelmed by an influx of requests. When this happens, traffic may unintentionally be redirected to a single instance, leading to cascading failures and resulting in service unavailability. This scenario, characterized by a sudden spike in incoming traffic, is known as the Thundering Herd Problem.

The Thundering Herd Problem can be seen in caching in two ways:

Cache Stampede Issue

Cache stampede is a situation that occurs when a popular cached key expires, causing multiple clients to request the same data simultaneously. This surge in requests overwhelms the underlying data store, as each client attempts to fetch or recompute the missing data at once. As a result, the sudden influx of traffic can lead to significant performance degradation and service unavailability.

Figure 2 shows the situation which leads to a cache stampede situation.

Example: E-commerce platforms during flash sales face cache stampede when the product availability cache expires, leading to a flood of DB queries as users check out at the same time.

Cache Avalance in Distributed Systems

Cache avalanche occurs when multiple cached keys in a single node expire simultaneously, or when a cache node goes down, leading to an overwhelming number of requests directed to the underlying data store or other cache nodes.

Unlike a cache stampede, which is triggered by the expiration of a single key, a cache avalanche involves the mass expiration of many keys at once. This scenario can severely strain backend DBs and result in performance issues, as they struggle to handle the sudden surge in requests.

Figure 3 shows one of the situations which leads to a cache avalanche situation.

Solutions to Thundering Herd

There are several strategies to address the thundering herd problem. Let’s explore each one of these in detail

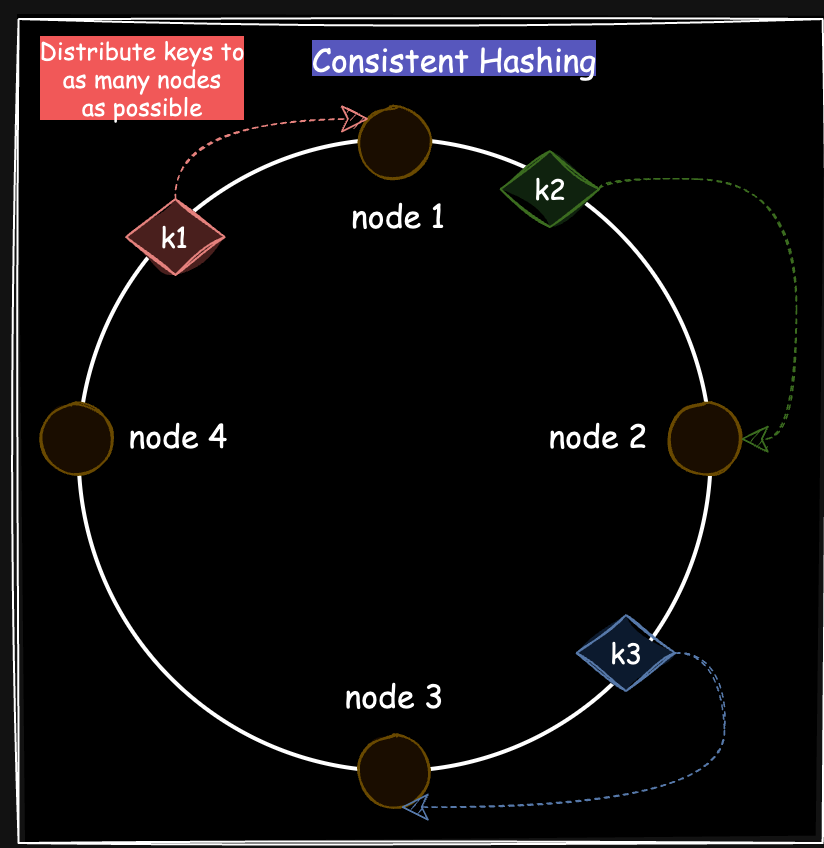

Using Consistent Hashing

As discussed in the previous article on Consistent Hashing, distributing keys across multiple nodes, combined with replication, is crucial for maintaining high data availability in the event of node failures. This approach helps mitigate the effects of cache avalanches and cache stampedes by balancing the load among remaining servers, thereby reducing the risk of a single point of failure.

Implementing Key Locking/Leasing

This approach is similar to mutexes in operating systems, where only one process can access a shared resource at a time. In caching, when multiple clients try to access an expired key, the first request locks the cache to update the key, while subsequent requests wait for the cache to refresh. This helps minimize the number of requests to the backend data source.

Figure 5 shows how this works:

Key Expiration: When a popular key (k1) expires, the first application server (A1) querying it experiences a cache miss.

Lock Acquisition: A1 acquires a lock on the cache for k1, preventing other servers from accessing it until the update is complete.

Request Queuing: Other servers trying to access k1 while it's locked must wait in a queue.

Data Retrieval: A1 retrieves the latest data for k1 from the DB and updates the cache.

Release of Lock: After updating, A1 releases the lock, allowing queued servers to access the updated value.

Applying Random Expiration Policies

To prevent cache avalanche, implement staggered expiration by assigning a random time-to-live (TTL) to each cached key.

This technique effectively spreads out the expiration times of cached entries, minimizing the risk of multiple items expiring simultaneously. By introducing randomness in the TTL, you reduce the chances of overwhelming the backend system with a flood of requests when a cache reset occurs.

Using Circuit-Breaking Techniques

Circuit breaking stops further requests to the database until it is back online and able to handle traffic again.

Request Rate Limiting and Load Shedding

Request rate limiting and load shedding help prevent cache stampede by managing how many requests are processed. These techniques can be applied per user, client, or system-wide to maintain stability during peak loads.

Cache Penetration in Distributed Systems

Overview of the Cache Penetration Problem

Cache penetration occurs when clients, often with malicious intent, send requests for keys that do not exist in both the cache and the underlying database. Figure 7 shows an example scenario:

Requesting Non-existent Keys: Clients attempt to access a non-existent key("k1") which is absent in the cache.

Cache Miss and Database Query: Since the cache does not contain the key, the system queries the underlying DB for that key.

Null Response from Database: The database also lacks this key, resulting in a null response.

Overwhelming the Database: The malicious clients repeatedly send requests for the non-existent key, leading to a flood of queries that overwhelm the database and can degrade its performance.

Example: A news website might face this when many bots query outdated or non-existent articles, resulting in high backend load since the cache never stores these responses.

Solutions for Cache Penetration

There are two ways to mitigate cache penetration. Let’s look at each one of these.

Storing “null“ in cache to reduce load

Store a placeholder value like "null" in the cache for non-existent keys (Figure 8). This prevents unnecessary hits to the database by serving future requests directly from the cache. Set a TTL (Time to Live) for these entries to free up cache space after a reasonable period.

Using Bloom Filters to Prevent Misses

Bloom filters are probabilistic data structures used to quickly check whether a key is absent before querying the cache or database. If the filter suggests that a key isn’t present, it avoids hitting the cache or database, reducing unnecessary load, all while operating in constant time.

That's it for our overview of Bloom Filters for now. I'll explore the topic in more detail in a future deep dive.

Now, let’s return to the challenges associated with distributed caches. 👇

Hot Key Problem in Caching

What is the hot issue?

In a distributed cache system, keys are distributed across different nodes based on the sharding strategy. However, frequently accessed keys may cluster on the same node, leading to congestion. This phenomenon is known as the hot key problem. For instance, keys "k5" and "k6" located in node 2, can potentially overwhelm that node due to high traffic.

Example: If a specific video goes viral on YouTube, the cache for the video metadata might become overloaded as millions of users access the same data, causing delays in responses.

Solutions for Hot Key Problem

Replication for better Load Management

To effectively manage hot keys, it’s important to increase their replication factor beyond the standard approach used in a distributed cache cluster.

For instance in Figure 16, if k5 and k6 are identified as hot keys, create N replicas (k5-1, k5-2, …, k5-N) and distribute these replicas across various nodes. This way, when a read request for one of these keys is made, it can be served from any of the replicas at random, allowing for even traffic distribution.

This strategy helps prevent any single node from becoming overloaded, ensuring smoother performance for high-demand keys.

Applying Rate Limiting for high-traffic keys

To manage excessive requests for a key, you can implement rate limiting by placing additional requests in a wait queue. These queued requests will be processed only after the current ones have been completed successfully.

For a more in-depth understanding of rate limiting, I’ve written separate articles, Rate Limiting Part 1 and Part 2, which include detailed explanations and cheat sheets. I encourage you to check them out and share your feedback 😉!

Large key problem in Caching

What is the Large Key problem?

The large key problem occurs when a cache is used to store oversized data, such as large JSON responses, images, or big data blobs. Figure 17 shows a large key like "k2" with a 2MB payload can cause several issues:

Network Bandwidth: Frequent access to large keys consumes more bandwidth, slowing down lookup times.

Update Overhead: Partial updates to large keys force the entire value to be rewritten, impacting performance.

Cache Eviction: If evicted or invalidated, reloading large keys from storage is slow, further degrading query performance.

Solutions for Large Keys

Using Compression to Optimize Storage

One effective approach to managing large values in caching is compressing them before storage. Compression reduces the memory used by cached data, making it easier to store larger values without consuming excessive cache space.

When a value exceeds a specified size, the system compresses it (using algorithms like GZIP, LZ4, or Snappy) before caching it. On retrieval, the data is decompressed for use.

However, compression adds latency to both read and write operations and can slow down debugging, as values must be decompressed before inspection.

Chunking Large Keys for Better Management

For handling large data structures like JSON, lists, or hashmaps in the cache, one effective strategy is to split the data into smaller pieces and store them under separate keys.

Figure 19 shows how user video metadata (like video views and resume timestamp) is cached.

Instead of storing everything in one large object (eg: user_id -> video_metadata), the key can be split into smaller parts. For instance, user_1_v1_views: 10 and user_1_v1_resume_at: 10:20 to store views and resume timestamps for video v1 in separate keys, reducing the size and complexity of individual entries.

Hybrid Caching Approaches for Large Keys

In some cases, using a hybrid caching strategy with multiple cache layers can be efficient. Smaller, frequently accessed items can be stored in fast in-memory caches like Redis or Memcached, while larger, less frequently accessed data can be kept in disk-based caches, databases, or object storage systems.

For example in Figure 20, instead of storing large objects directly in the cache, the cache holds a reference (like an Amazon S3 URL) pointing to the data stored elsewhere. When large data is needed, the application retrieves it from the external storage using the cached reference.

Using Proxy Clusters for Scalability

A dedicated multi-node storage cluster can sit between app servers and the distributed cache cluster to handle large keys. While slower than in-memory caches, this layer, using NVMe or SSD drives, offers a faster alternative to disk-based storage, maintaining the benefits of caching for large keys.

Due to the added complexity of managing another storage layer, this approach should be considered an outlier. It is worth exploring only when there are many large keys and simpler solutions, like compression or chunking, aren’t effective.

Memory Fragmentation in Caching

What is the memory fragmentation problem?

Fragmentation occurs when free memory becomes scattered in small, non-contiguous blocks throughout the system. This happens over time as data is repeatedly allocated and deallocated in the cache, making it inefficient since these fragmented blocks may be too small to hold new larger cache entries.

This issue is more common in in-memory caches like Redis or Memcached, especially when key-value sizes vary widely or memory reclamation isn’t consistent. As memory becomes fragmented, it can lead to reduced cache performance and difficulty in handling larger items.

Example (Figure 22):

Imagine a Redis cache system with a memory capacity of 1 GB. Initially, small keys of size < 5 KB each are stored in the cache. When a large key (eg: 10KB) is added, it won’t fit in smaller spaces and will start creating small gaps in the system. Even though the cache might have 500 MB of free memory available, it might only be in small chunks like 2 KB, 4 KB, and so on. These fragmented blocks cannot be used to store the larger entries, and eventually, the system runs into out-of-memory errors even though, theoretically, there is still enough memory available.

Solutions to Mitigate Memory Fragmentation

Most of the popular distributed caches already have inbuilt mechanisms to deal with memory fragmentation issues. Let’s look at how Redis and Memcached solve this:

Applying Defragmentation techniques

The Defragmentation/Garbage Collection process compacts memory blocks by relocating data to create contiguous blocks of free space. This can reduce fragmentation and make better use of available memory. Redis, for example, triggers memory defragmentation when it detects high levels of fragmentation, which can be configured through Redis commands like MEMORY PURGE

Using Slab Allocation for Efficient Memory Management

Instead of dynamically allocating memory for each new cache entry, Memcached uses predefined memory chunks (slabs) of fixed sizes to store objects. Objects are grouped by size, and each size group is allocated memory from a corresponding slab. For example, objects of size 16 KB might all be stored in 16 KB slabs, while larger objects are stored in their appropriate size slabs. This prevents small objects from leaving gaps between larger ones, thus reducing fragmentation.

This brings us to the end of this series on caching! This was the longest and the most important part because it shares a lot of practical challenges and mitigation strategies while dealing with caching systems at scale.

Congrats if you made it here 🎉! Just like previous posts, sharing a consolidated cheat sheet on caching problems & solutions 🧷.

PS: Cheatsheets of previous parts of the series — Part 1 & Part 2.

Liked this article? Make sure to 💙 click the like button.

Feedback or addition? Make sure to 💬 comment.

Know someone who would find this helpful? Make sure to 🔁 share this post.

All 3 parts are goldmines, learned a lot.

I had a query related to Redis can I ask here ?