How to use Kafka in system design interviews?

A complete guide to using Kafka in system design interviews for software engineers

In the last post, we went over the basics of Kafka. However, in the design deep dive phase of system design interviews, especially for senior engineering roles (>4 yrs exp), interviewers often expect a deeper understanding of Kafka. Even for junior roles, knowing some of these concepts can give you an edge during the interview. In this post, we’ll explore key Kafka concepts you should know to tackle deep-dive questions in interviews and make the best use of Kafka in your designs.


Kafka deep dive concepts

How to achieve Scalability in Kafka?

In a Kafka environment, achieving scale relies on optimizing message handling, resource distribution, and throughput. As a rough guideline, a single broker on strong hardware can store around 1TB of data and handle roughly 10,000 messages per second (the real numbers depend heavily on message size and hardware specs). If your requirements fall within these limits, you may not need to scale at all. Here's a breakdown of key strategies for scaling Kafka effectively.
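The rough guideline above can be turned into a number with a back-of-the-envelope sketch in Python. It assumes the paragraph's per-broker figures (1 TB, 10,000 messages/second) and treats replication as multiplying stored data. `estimate_brokers` is a hypothetical helper. Real sizing should rely on benchmarks from your own hardware.

```python
import math

# Rough per-broker limits from the guideline above (assumptions, not guarantees).
BROKER_STORAGE_TB = 1.0
BROKER_MSGS_PER_SEC = 10_000

def estimate_brokers(storage_tb: float, msgs_per_sec: float, replication_factor: int = 1) -> int:
    """Brokers needed to satisfy both storage and throughput (simplified)."""
    # Each replica is a full copy, so replication multiplies the stored bytes.
    by_storage = math.ceil(storage_tb * replication_factor / BROKER_STORAGE_TB)
    by_throughput = math.ceil(msgs_per_sec / BROKER_MSGS_PER_SEC)
    # A topic cannot have more replicas than there are brokers.
    return max(by_storage, by_throughput, replication_factor)

print(estimate_brokers(0.5, 8_000))                       # 1: within a single broker's limits
print(estimate_brokers(5, 30_000, replication_factor=3))  # 15: storage-bound
```

In the second case storage, not throughput, drives the broker count. That is a useful point to call out in an interview.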

Add more brokers for horizontal scaling

Adding brokers to the Kafka cluster is the most straightforward way to scale. This spreads the load and improves fault tolerance. However, to fully benefit from additional brokers, ensure that your topics have enough partitions to allow for parallelism. Without adequate partitions, new brokers won’t effectively balance the load.
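The sketch below shows why partition count caps the benefit of new brokers. It assumes a simplified round-robin placement of partition leaders. Real Kafka placement also spreads follower replicas and can be rack-aware. The helper names are hypothetical.

```python
from __future__ import annotations

def leaders_per_broker(num_partitions: int, num_brokers: int) -> dict[int, int]:
    """Count partition leaders per broker under round-robin placement."""
    counts = {broker: 0 for broker in range(num_brokers)}
    for partition in range(num_partitions):
        counts[partition % num_brokers] += 1
    return counts

def idle_brokers(num_partitions: int, num_brokers: int) -> list[int]:
    """Brokers that lead no partition of this topic (ignoring follower replication)."""
    return [b for b, n in leaders_per_broker(num_partitions, num_brokers).items() if n == 0]

print(idle_brokers(3, 6))   # [3, 4, 5]: half the cluster serves none of this topic's clients
print(idle_brokers(12, 6))  # []: every broker leads two partitions
```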

Keep the message size small

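Kafka performs best with smaller messages, which are faster to process and cheaper to store. Batching records and enabling compression on the producer (compression.type, batch.size, linger.ms) improves throughput and reduces network and storage usage, because Kafka compresses each batch as a whole. Note that message.max.bytes is a broker (or topic) setting, while the others are producer settings. Sample configs: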

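message.max.bytes=1048576 # Broker/topic: largest record batch the broker accepts (about 1 MB)

batch.size=16384 # Producer: maximum size of a batch in bytes, per partition

linger.ms=1 # Producer: how long to wait for more records before sending a batch

compression.type=snappy # Producer: codec used to compress each batch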
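This Python sketch shows why batching and compression go together. It compresses hypothetical ad-click events one at a time and then as a single batch. Kafka compresses each record batch as a unit, so similar records in the same batch compress well.

Python's standard library has no Snappy codec. `zlib` (DEFLATE, the algorithm behind Kafka's gzip codec) stands in for it, so exact byte counts will differ from a real producer.

```python
import json
import zlib

# 500 hypothetical ad-click events; field names repeat in every message.
events = [
    json.dumps({"ad_id": f"ad-{i % 10}", "event": "click", "region": "us-east-1"}).encode("utf-8")
    for i in range(500)
]

raw_bytes = sum(len(e) for e in events)
# One message at a time: little repetition to exploit, plus per-message codec overhead.
one_by_one = sum(len(zlib.compress(e)) for e in events)
# Whole batch at once, as Kafka does for a record batch.
batched = len(zlib.compress(b"\n".join(events)))

print(f"raw={raw_bytes} B, compressed one by one={one_by_one} B, compressed as a batch={batched} B")
```

Raising `linger.ms` lets the producer wait a little longer to fill each batch. This trades a few milliseconds of latency for the better compression ratio shown here.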

Add more partitions to high-traffic topics in Kafka

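For high-traffic topics, increase the partition count for better load distribution and the replication factor for fault tolerance. Even then, a single hot key (such as a viral ad's ID) can overload one partition, because every message with the same key goes to the same partition. Here are ways to manage such hot partitions effectively: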

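Random Partitioning (No Key): When records have no key, the producer spreads them across partitions (round-robin in older clients, batch-by-batch "sticky" assignment in newer ones). This gives an even distribution over time but no ordering guarantees. Suitable if order doesn't matter.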

Salting Keys: Add a random number or timestamp to keys (e.g., ad IDs) to spread the load evenly across partitions, though this may complicate later aggregation.

Compound Keys: Combine the primary key (like ad ID) with another attribute, like region or user segment, to balance traffic across partitions.

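Back Pressure: Slow down producers when consumer lag grows. Kafka brokers support client quotas that throttle producers exceeding a configured byte rate, and managed Kafka services may offer similar controls by default. Otherwise, monitor lag yourself and adjust the producer rate.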
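The key-based strategies above can be compared with a small simulation. This is a model, not Kafka's partitioner:

- Kafka hashes keys with murmur2, while `zlib.crc32` stands in here.
- The partition count and salt bucket count are assumptions.
- The key formats (`ad-42#3`, `ad-42:eu`) are hypothetical.

```python
import random
import zlib

NUM_PARTITIONS = 6
SALT_BUCKETS = 8          # assumed: number of salted variants per hot key
rng = random.Random(42)   # seeded so the example is reproducible

def partition_for(key: str) -> int:
    # Stand-in hash: Kafka's default partitioner uses murmur2 on the key bytes.
    return zlib.crc32(key.encode("utf-8")) % NUM_PARTITIONS

def salted_key(ad_id: str) -> str:
    # Salting: a random suffix spreads one hot ad ID over several partitions.
    # Aggregations must later merge counts across all "ad-42#0".."ad-42#7" keys.
    return f"{ad_id}#{rng.randrange(SALT_BUCKETS)}"

def compound_key(ad_id: str, region: str) -> str:
    # Compound key: ad ID plus a second attribute, keeping per-(ad, region) ordering.
    return f"{ad_id}:{region}"

hot_ad = "ad-42"
plain = {partition_for(hot_ad) for _ in range(1_000)}
salted = {partition_for(salted_key(hot_ad)) for _ in range(1_000)}
by_region = {partition_for(compound_key(hot_ad, r)) for r in ("us", "eu", "apac", "latam")}

print("unsalted:", plain)     # every event for the hot ad lands on one partition
print("salted:", salted)      # the same traffic is spread over several partitions
print("compound:", by_region)
```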

How to achieve Fault Tolerance and Reliability with Kafka

Consumer rebalancing in Kafka

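When consumers fail or new ones join a group, Kafka redistributes partitions among the group's members to keep messages flowing. Rebalancing keeps every partition actively consumed, but frequent rebalances add latency because consumption of the affected partitions pauses while they are reassigned. Two settings matter most:

- session.timeout.ms controls how long the group coordinator waits for heartbeats before declaring a consumer dead.
- max.poll.interval.ms sets the maximum time between poll() calls before a consumer is removed from the group.

Values that are too low trigger unnecessary rebalances; values that are too high delay recovery from real failures.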
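To make the redistribution concrete, here is a simplified range-style assignment for a single topic. It is similar in spirit to Kafka's RangeAssignor, which also hands the extra partitions to the first consumers in sorted order. Consumer names are hypothetical. The real group protocol, cooperative rebalancing, and multi-topic handling are omitted.

```python
from __future__ import annotations

def range_assign(partitions: list[int], consumers: list[str]) -> dict[str, list[int]]:
    """Split partitions into contiguous ranges; the first consumers get any extras."""
    consumers = sorted(consumers)
    per_consumer, extra = divmod(len(partitions), len(consumers))
    assignment: dict[str, list[int]] = {}
    start = 0
    for i, consumer in enumerate(consumers):
        count = per_consumer + (1 if i < extra else 0)
        assignment[consumer] = partitions[start:start + count]
        start += count
    return assignment

partitions = list(range(6))
before = range_assign(partitions, ["c1", "c2", "c3"])
after = range_assign(partitions, ["c1", "c2"])  # c3 crashed or left the group
print(before)  # {'c1': [0, 1], 'c2': [2, 3], 'c3': [4, 5]}
print(after)   # {'c1': [0, 1, 2], 'c2': [3, 4, 5]}
```

Partition 2 moves from c2 to c1 even though c2 is healthy. This is why rebalances can pause more than just the failed consumer's partitions.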

Replication strategies

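Kafka's replication factor sets how many copies of each partition are kept on different brokers, adding fault tolerance and data protection. A higher replication factor lowers the risk of data loss during broker failures but requires more storage and bandwidth. There are two ways to set it:

- Per topic at creation time, for example with the --replication-factor option of kafka-topics.sh.
- As a cluster-wide default, through the broker setting default.replication.factor.

Choose a value that matches your system's availability needs.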
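The storage-versus-safety trade-off is simple arithmetic. This sketch assumes all replicas are in sync. It ignores settings such as min.insync.replicas that also affect write availability. `replication_plan` is a hypothetical helper.

```python
from __future__ import annotations

def replication_plan(data_gb: float, replication_factor: int) -> dict[str, float]:
    """Storage cost and failure tolerance for one replication factor (simplified)."""
    return {
        # Each replica keeps a full copy of the partition data.
        "total_storage_gb": data_gb * replication_factor,
        # Every write is copied from the leader to RF - 1 followers.
        "follower_copies_per_write": replication_factor - 1,
        # Data stays available if up to RF - 1 replica brokers fail,
        # assuming all replicas are in sync when the failures happen.
        "broker_failures_tolerated": replication_factor - 1,
    }

for rf in (1, 2, 3):
    print(rf, replication_plan(200, rf))
```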

Processing guarantees in Kafka

Each partition acts as an append-only log in which every message has a unique, sequential offset. Consumers commit offsets to record how far they have processed, so after a restart they resume from the last committed offset. Whether messages can be skipped or processed twice depends on when the offset is committed relative to processing. Figure 1 shows how Kafka consumers manage offsets.

Kafka supports three processing guarantees, each tied to a different offset commit strategy:

At-most once processing

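In this mode, each message is processed at most once: after a failure it may be skipped, but it is never processed twice. This happens when offsets are committed before processing completes.

With enable.auto.commit set to true, offsets are committed on a timer (auto.commit.interval.ms) rather than after your processing succeeds. A crash can therefore leave messages committed but never processed, and Kafka won't redeliver them, which can lead to data loss. Committing explicitly right after polling, before processing, makes this behavior deliberate. This option works best for non-critical data. Figure 2 shows the relevant code snippet.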
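The following Python sketch models at-most-once delivery. An in-memory list stands in for a partition, and a counter stands in for the committed offset. It is a hypothetical model, not the Kafka client API. The offset is committed before processing, so a crash skips a message.

```python
from __future__ import annotations
from dataclasses import dataclass

@dataclass
class Partition:
    log: list[str]
    committed: int = 0  # next offset the group reads after a restart

def consume_at_most_once(p: Partition, processed: list[str], crash_at: int | None = None) -> None:
    while p.committed < len(p.log):
        offset = p.committed
        p.committed = offset + 1  # 1) commit the offset first...
        if offset == crash_at:
            raise RuntimeError("consumer crashed mid-processing")
        processed.append(p.log[offset])  # 2) ...then process the message

p = Partition(["m0", "m1", "m2"])
processed: list[str] = []
try:
    consume_at_most_once(p, processed, crash_at=1)
except RuntimeError:
    pass
consume_at_most_once(p, processed)  # restart resumes from the committed offset
print(processed)  # ['m0', 'm2']: m1 was committed but never processed
```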

At-least once processing

This mode ensures each message is processed at least once but may result in duplicates if retries occur after a failure. Here, enable.auto.commit is set to false, and offsets are committed only after processing completes successfully. It’s ideal when data loss is unacceptable, such as in logging applications, even if it means handling duplicate messages. Figure 3 shows the relevant code snippet.
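This sketch uses the same kind of in-memory model, which is hypothetical and not the Kafka client API. Committing after processing shows where duplicates come from. A crash after processing but before the commit makes the consumer reprocess that message on restart.

```python
from __future__ import annotations
from dataclasses import dataclass

@dataclass
class Partition:
    log: list[str]
    committed: int = 0  # next offset the group reads after a restart

def consume_at_least_once(p: Partition, processed: list[str], crash_at: int | None = None) -> None:
    while p.committed < len(p.log):
        offset = p.committed
        processed.append(p.log[offset])  # 1) process the message first...
        if offset == crash_at:
            raise RuntimeError("consumer crashed before committing")
        p.committed = offset + 1  # 2) ...then commit the offset

p = Partition(["m0", "m1", "m2"])
processed: list[str] = []
try:
    consume_at_least_once(p, processed, crash_at=1)
except RuntimeError:
    pass
consume_at_least_once(p, processed)  # restart resumes from the last committed offset
print(processed)  # ['m0', 'm1', 'm1', 'm2']: m1 was processed twice, nothing was lost
```

Downstream logic therefore needs to tolerate or deduplicate repeats, for example by tracking processed message IDs.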

Exactly once processing

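Exactly-once semantics (EOS) ensure each message's effects are applied exactly once. Kafka achieves this by combining two mechanisms:

- Idempotent producers (enable.idempotence=true), which prevent duplicate writes on retry.
- Transactions, in which the producer writes its output records and the consumer's offsets together, so either both are committed or neither is.

Downstream consumers read with isolation.level=read_committed so they never see records from aborted transactions. This guarantee covers Kafka-to-Kafka pipelines; side effects in external systems need their own safeguards. This level of reliability is essential for sensitive applications like financial transactions, where precision is critical. Figure 4 shows the relevant code snippet.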
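This sketch models the exactly-once idea with the same hypothetical in-memory partition. The key assumption is that the output record and the offset commit succeed or fail together, which is what Kafka transactions provide. A plain Python function stands in for the transaction here; it is not the Kafka transactional API.

```python
from __future__ import annotations
from dataclasses import dataclass

@dataclass
class Partition:
    log: list[str]
    committed: int = 0  # next offset the group reads after a restart

def process_exactly_once(p: Partition, output_topic: list[str], crash_at: int | None = None) -> None:
    """Write the result and the new offset together, or not at all."""
    while p.committed < len(p.log):
        offset = p.committed
        # Begin "transaction": stage the output record and the offset commit.
        staged_output = p.log[offset].upper()
        staged_offset = offset + 1
        if offset == crash_at:
            # Crash before commit: staged changes are discarded, like an aborted transaction.
            raise RuntimeError("crashed before the transaction committed")
        # Commit "transaction": in this single-threaded model, these two lines stand in
        # for Kafka's atomic commit of output records plus consumer offsets.
        output_topic.append(staged_output)
        p.committed = staged_offset

p = Partition(["m0", "m1", "m2"])
output: list[str] = []
try:
    process_exactly_once(p, output, crash_at=1)
except RuntimeError:
    pass
process_exactly_once(p, output)  # restart resumes at m1
print(output)  # ['M0', 'M1', 'M2']: nothing duplicated, nothing lost
```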

Handling Errors and Retries in Kafka

Kafka retries help prevent message loss during temporary issues like network disruptions. Retries can be set up for both producers and consumers.

Producer retry strategies

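Producer retries are configured using the retries (how many times to retry a failed send) and retry.backoff.ms (how long to wait between attempts) settings. When a producer faces a temporary issue, such as network instability or a broker being briefly unavailable, these settings let Kafka resend the message instead of discarding it. In recent clients, retries defaults to a very large value and the total time spent is bounded by delivery.timeout.ms. Enabling idempotence (enable.idempotence=true) prevents retries from writing duplicates. See Figure 5 for a code example.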
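The Kafka producer performs these retries internally, so application code normally just sets the configs. The hypothetical retry loop below illustrates what `retries` and `retry.backoff.ms` mean. `send_with_retries`, `TransientError`, and the flaky sender are illustrative names, not Kafka APIs.

```python
import time
from typing import Callable

class TransientError(Exception):
    """Hypothetical retriable error, e.g. a broker that is briefly unavailable."""

def send_with_retries(
    send: Callable[[], str],
    retries: int = 3,
    retry_backoff_ms: int = 100,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Try send() once, then retry up to `retries` more times, waiting between attempts."""
    for attempt in range(retries + 1):
        try:
            return send()
        except TransientError:
            if attempt == retries:
                raise  # retries exhausted: surface the failure to the caller
            sleep(retry_backoff_ms / 1000)  # fixed backoff, like retry.backoff.ms
    raise AssertionError("unreachable")

# A hypothetical sender that fails twice and then succeeds.
attempts = {"n": 0}

def flaky_send() -> str:
    attempts["n"] += 1
    if attempts["n"] <= 2:
        raise TransientError("broker briefly unavailable")
    return "acked"

print(send_with_retries(flaky_send, sleep=lambda s: None))  # acked
print(attempts["n"])  # 3
```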

Consumer Retry strategies

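A common pattern is for consumers to retry a failed message a set number of times before moving it to a Dead Letter Queue (DLQ). The plain Kafka consumer doesn't do this for you; the application (or a framework built on top of Kafka) implements the flow. Figure 6 provides a detailed breakdown of how the retry process operates on the consumer side, including the transition to the DLQ.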

Here is a step-by-step breakdown:

Main consumer

A producer sends a message to the Main Topic. If the consumer fails to process it, the message and its error context are sent to a dedicated Retry Topic, isolating failed messages from the main processing flow.

The original message ID and timestamps help track failures and aid debugging, while error details and service version information help identify recurring issues.

Persistence in MySQL

Failed messages are logged in MySQL with the current retry count and timestamps, helping to analyze failure patterns.

Error messages and service versions provide context for failures, supporting effective troubleshooting.

Scheduled Retries via Airflow

Airflow regularly picks up failed messages to ensure all retries are processed promptly.

Exponential backoff in retries helps avoid system overload during high failure rates.

Dead Letter Queue

If the message still fails processing even after N retry attempts from the retry topic, it is sent to a Dead Letter Queue (DLQ).

The DLQ is a separate Kafka topic, typically configured with long or unlimited retention, that holds failed messages so developers can analyze them later, determine the failure cause, and fix them. It ensures no message is silently lost, providing a fallback for unresolvable errors.
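The routing decision in this flow can be sketched as follows. The retry limit, base delay, cap, and topic names (`orders.retry`, `orders.dlq`) are assumptions for illustration. In the setup described above, the retry count would come from MySQL, and Airflow would act on the computed delay.

```python
from __future__ import annotations
from dataclasses import dataclass

MAX_RETRIES = 3        # assumed value of N
BASE_DELAY_S = 30.0    # assumed delay before the first retry
MAX_DELAY_S = 3_600.0  # assumed cap so delays don't grow without bound

@dataclass
class FailedMessage:
    message_id: str
    retry_count: int  # as persisted in MySQL
    error: str

def next_retry_delay(retry_count: int) -> float:
    """Exponential backoff: 30 s, 60 s, 120 s, ... capped at MAX_DELAY_S."""
    return min(MAX_DELAY_S, BASE_DELAY_S * 2 ** retry_count)

def route(msg: FailedMessage) -> tuple[str, float | None]:
    """Return (destination topic, delay in seconds) for a failed message."""
    if msg.retry_count >= MAX_RETRIES:
        return ("orders.dlq", None)  # retries exhausted: park it in the DLQ
    return ("orders.retry", next_retry_delay(msg.retry_count))

for count in range(5):
    print(count, route(FailedMessage("msg-1", count, "TimeoutError")))
```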

Performance optimizations in Kafka

Use Schema Registry for data consistency

In messaging or event streaming systems, data is often serialized using a schema format before being published to topics or queues. However, as data producers and consumers evolve, they might have different expectations of data formats, leading to issues such as:

Schema Mismatch: Producers may publish data in formats that consumers cannot read if they are using outdated or incompatible schemas.

Maintenance Complexity: Manually updating schemas across multiple applications can be error-prone and challenging to coordinate.

A schema registry is a centralized service that manages schemas for systems such as Kafka. It ensures that both producers and consumers use compatible schemas.

Benefits of using a Schema Registry:

Decoupling Schemas: Producers and consumers can work independently, allowing easier upgrades and maintenance.

Central Schema Tracking: All schemas used in production are stored in a central location.

Versioning Benefits: Facilitates schema changes by managing different versions, ensuring backward compatibility, and minimizing errors during updates.
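As an example of the checks a registry can enforce, here is a simplified backward-compatibility rule in the style of Avro. A consumer on the new schema can read old data if every newly added field has a default. This sketch treats any type change as incompatible and ignores Avro's type promotions. The schema representation is hypothetical.

```python
from __future__ import annotations

def is_backward_compatible(old_fields: dict[str, dict], new_fields: dict[str, dict]) -> bool:
    """Can a reader using new_fields decode data written with old_fields?"""
    for name, spec in new_fields.items():
        if name not in old_fields:
            # A field added in the new schema needs a default for old records.
            if "default" not in spec:
                return False
        elif spec["type"] != old_fields[name]["type"]:
            return False  # simplification: treat every type change as breaking
    # Fields removed in the new schema are simply ignored when reading old data.
    return True

v1 = {"order_id": {"type": "string"}, "amount": {"type": "double"}}
v2_ok = {**v1, "currency": {"type": "string", "default": "USD"}}
v2_bad = {**v1, "currency": {"type": "string"}}
print(is_backward_compatible(v1, v2_ok))   # True
print(is_backward_compatible(v1, v2_bad))  # False
```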

What is Apicurio in Kafka?

Apicurio Schema Registry is a runtime server that stores and manages schemas for data serialization. Key advantages of Apicurio:

Open Source: Supported by an active open-source community.

Multi-Format Support: Supports Avro, JSON Schema, and Protobuf.

Schema Evolution and Versioning: Provides schema versioning, supporting backward and forward compatibility.

Schema Validation: Validates schemas against predefined rules to prevent deployment of invalid schemas.

How does Apicurio work with Kafka?

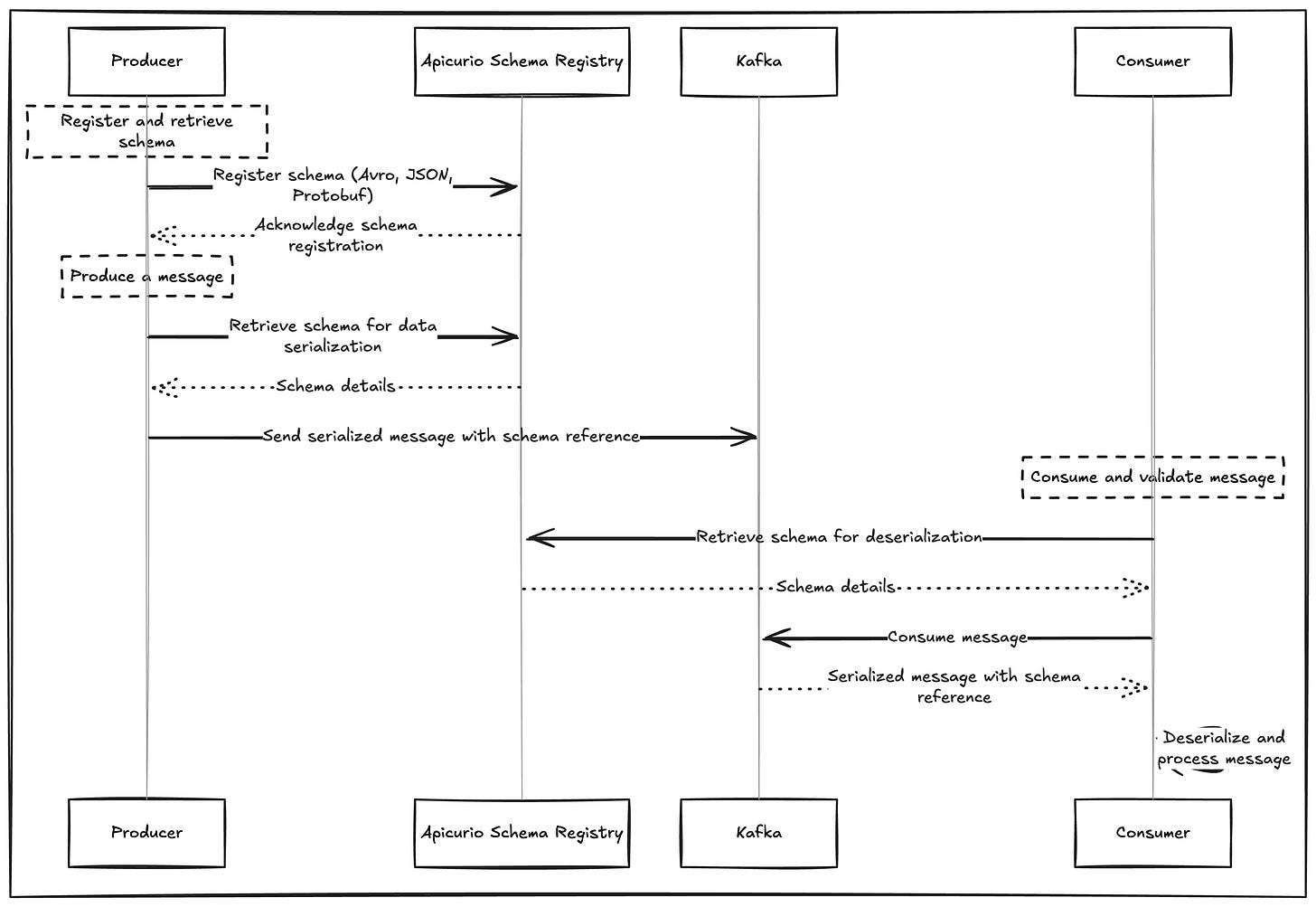

Figure 7 shows the steps involved when using a schema registry:

The producer registers the schema in the Apicurio Schema Registry before producing messages.

Before sending the message to Kafka, the producer retrieves the schema from the registry to serialize data.

The producer sends the serialized message to Kafka with a reference to the schema.

The consumer retrieves the schema from the registry to understand the data format.

The consumer uses the schema to deserialize the message and consume it.
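The five steps can be sketched end to end with an in-memory registry. `InMemoryRegistry`, `serialize`, and `deserialize` are hypothetical stand-ins, not the Apicurio client API. The 4-byte schema-ID prefix is an assumed framing; real serializers use Avro, Protobuf, or JSON Schema encodings.

```python
from __future__ import annotations
import json
import struct

class InMemoryRegistry:
    """Hypothetical stand-in for a schema registry (not the Apicurio client API)."""

    def __init__(self) -> None:
        self._by_id: dict[int, dict] = {}
        self._ids: dict[str, int] = {}

    def register(self, schema: dict) -> int:
        """Register a schema (idempotently) and return its ID."""
        key = json.dumps(schema, sort_keys=True)
        if key not in self._ids:
            schema_id = len(self._by_id) + 1
            self._ids[key] = schema_id
            self._by_id[schema_id] = schema
        return self._ids[key]

    def get(self, schema_id: int) -> dict:
        return self._by_id[schema_id]

def serialize(registry: InMemoryRegistry, schema: dict, record: dict) -> bytes:
    schema_id = registry.register(schema)  # steps 1-2: register / look up the schema
    missing = [f for f in schema["fields"] if f not in record]
    if missing:
        raise ValueError(f"record is missing fields: {missing}")
    # Step 3: assumed framing, a 4-byte big-endian schema ID followed by a JSON body.
    return struct.pack(">I", schema_id) + json.dumps(record).encode("utf-8")

def deserialize(registry: InMemoryRegistry, data: bytes) -> dict:
    (schema_id,) = struct.unpack(">I", data[:4])  # step 4: fetch the schema by ID
    schema = registry.get(schema_id)
    body = json.loads(data[4:])
    return {f: body[f] for f in schema["fields"]}  # step 5: read fields per the schema

registry = InMemoryRegistry()
order_schema = {"name": "Order", "fields": ["order_id", "amount"]}
payload = serialize(registry, order_schema, {"order_id": "o-1", "amount": 9.5})
print(deserialize(registry, payload))  # {'order_id': 'o-1', 'amount': 9.5}
```

Sending a small ID instead of the full schema keeps each message compact, while consumers can still look up the exact schema the producer used.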

Consumer Lag in Kafka

Kafka consumer lag is, for each partition, the difference between the latest offset in the partition (the log end offset) and the offset the consumer group has committed. A group's total lag is the sum across partitions (Figure 8). It indicates how far behind a consumer is in processing messages from a Kafka topic. Monitoring consumer lag is crucial because high lag delays message processing, hurting system performance and user experience.
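Lag is computed per partition. Here is a small sketch with made-up offsets. Tools such as `kafka-consumer-groups.sh --describe` report the same quantities in their CURRENT-OFFSET, LOG-END-OFFSET, and LAG columns.

The sketch makes one simplifying assumption: a partition with no committed offset is treated as offset 0. In practice, auto.offset.reset decides where such a consumer starts.

```python
from __future__ import annotations

def consumer_lag(log_end: dict[int, int], committed: dict[int, int]) -> dict[int, int]:
    """Per-partition lag = log end offset - committed offset."""
    # Assumption: a partition with no committed offset is treated as offset 0.
    return {p: end - committed.get(p, 0) for p, end in log_end.items()}

log_end_offsets = {0: 1_500, 1: 1_480, 2: 2_900}  # made-up numbers
committed_offsets = {0: 1_500, 1: 1_200, 2: 900}
lag = consumer_lag(log_end_offsets, committed_offsets)
print(lag)                # {0: 0, 1: 280, 2: 2000}
print(sum(lag.values()))  # 2280
```

A single partition with far more lag than the others, like partition 2 here, often points to a hot key or a stuck consumer rather than an undersized group.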

What causes consumer lag in Kafka?

Slow Processing: If the consumer application takes longer to process messages, it can lead to lag. For example, a consumer that performs complex data transformations may struggle to keep up with incoming messages.

High Throughput: A sudden increase in message production can overwhelm consumers that are not properly scaled. For instance, during a flash sale, a retail application may receive more order messages than its consumers can handle.

Network Latency: Poor network conditions between Kafka brokers and consumers can delay message delivery. For example, a consumer located far from the broker may face lag due to increased latency.

Consumer Configuration: Misconfigurations can also cause lag. Examples include having too few consumer threads or setting session timeouts so low that they trigger frequent rebalances. For instance, a low value for max.poll.records might limit the number of messages a consumer can process per poll() call.

How to mitigate consumer lag?

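Scaling Consumers: Increase the number of consumer instances, and partitions if needed, to handle higher message throughput. Within a consumer group each partition is consumed by at most one consumer, so consumers beyond the partition count sit idle.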

Optimizing Processing Logic: Streamline the consumer application logic to reduce processing time. For instance, break down complex operations or offload some tasks to background workers.

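Tuning Kafka Configurations: Adjust settings such as fetch.min.bytes and fetch.max.bytes (how much data each fetch request returns) and max.poll.interval.ms (how long a consumer may spend between polls before it is considered failed). This balances retrieval efficiency against unnecessary rebalances.

Batch Processing: Process records in batches (for example, writing a whole poll's worth of records to a database in one call) to reduce per-message overhead and the number of consumer polls, improving throughput.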
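A quick calculation shows how scaling consumers and partitions interact. This sketch assumes throughput scales linearly with consumer count. It uses hypothetical flash-sale numbers, and the helper names are illustrative.

```python
import math

def consumers_needed(incoming_per_s: float, per_consumer_per_s: float, partitions: int) -> int:
    """Consumers required to keep up, capped at the partition count (extras sit idle)."""
    return min(partitions, math.ceil(incoming_per_s / per_consumer_per_s))

def seconds_to_drain(lag: int, incoming_per_s: float, per_consumer_per_s: float, consumers: int) -> float:
    """Time to work off an existing backlog; infinite if consumers can't outpace producers."""
    surplus = consumers * per_consumer_per_s - incoming_per_s
    return math.inf if surplus <= 0 else lag / surplus

# Hypothetical flash sale: 5,000 orders/s arriving, each consumer handles 800/s.
n = consumers_needed(5_000, 800, partitions=12)
print(n, seconds_to_drain(120_000, 5_000, 800, n))  # 7 200.0

# With only 6 partitions, at most 6 consumers can work, and the backlog never drains.
m = consumers_needed(5_000, 800, partitions=6)
print(m, seconds_to_drain(120_000, 5_000, 800, m))  # 6 inf
```

The second case is the "or partitions" half of the advice above: adding consumers alone cannot help once every partition already has one.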

When to use Kafka in system design interviews?

Kafka can be used either as a message broker or as a stream-processing platform. As a message broker, it decouples services through asynchronous communication. For stream processing, Kafka (through its Kafka Streams library) provides a framework for real-time data transformation, aggregation, and analytics.

Kafka as a message queue

Ticket Booking Waiting Queue

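In systems like BookMyShow, Kafka can act as a waiting queue: each ticket request carries the user ID and a timestamp, and requests are processed in the order they arrive. Because Kafka preserves order only within a partition, requests competing for the same show should share a partition key. Each consumer handles requests one at a time to avoid race conditions and ensure fair processing. Failed requests are requeued for retries, so users have a fair chance to secure tickets.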

Payment Processing with Kafka: Example from Stripe

Payment services (P2P, Card, B2B) send transaction events to Kafka, which then streams these to different services. For example, Kafka sends transaction data (such as transaction ID, location, and IP address) to the Fraud Detection Service for compliance checks. At the same time, successful transaction events are sent to the Wallet Service for balance updates and to the Ledger Service for accounting. Check out Accounting 101 in Payment Systems for more on accounting system design.
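The fan-out in this example works because each service reads the topic in its own consumer group, and each group tracks its own offsets. This in-memory sketch models that idea. The group names and event fields are hypothetical, and offsets are committed immediately on poll for brevity.

```python
from __future__ import annotations

# One partition of a hypothetical "transactions" topic.
log = [
    {"txn_id": "t1", "status": "success", "amount": 40},
    {"txn_id": "t2", "status": "failed", "amount": 15},
    {"txn_id": "t3", "status": "success", "amount": 25},
]

# Each consumer group keeps its own committed offset into the same log.
committed = {"fraud-detection": 0, "wallet": 0, "ledger": 0}

def poll(group: str, max_records: int = 10) -> list[dict]:
    """Return the next records for a group and advance only that group's offset."""
    start = committed[group]
    batch = log[start:start + max_records]
    committed[group] = start + len(batch)
    return batch

fraud_checked = [e["txn_id"] for e in poll("fraud-detection")]
wallet_updates = [e["txn_id"] for e in poll("wallet") if e["status"] == "success"]
print(fraud_checked)        # ['t1', 't2', 't3']: fraud checks see every transaction
print(wallet_updates)       # ['t1', 't3']: the wallet only acts on successful ones
print(committed["ledger"])  # 0: the ledger group hasn't read yet; its position is independent
```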

Using Kafka for YouTube video transcoding

In a YouTube-style design, Kafka facilitates video transcoding: upload details are published to a topic for real-time processing by transcoding workers. Replication protects these events against data loss, while multiple consumers handle uploads concurrently, ensuring timely video delivery in various formats. This setup enhances user experience by reducing buffering and supporting diverse devices.

Kafka for message streaming

Kafka should be used for message streaming when:

You require continuous and immediate processing of incoming data, treating it as a real-time flow. This is the case, for example, when designing an ad click aggregator.

Messages need to be processed by multiple consumers simultaneously. For example, while designing Facebook live comments, we can use Kafka as a pub/sub system to send comments to multiple consumers.
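For the ad click aggregator case, the core stream-processing step is a windowed count. This sketch assumes one-minute tumbling windows and events shaped as `(epoch_seconds, ad_id)` tuples. A stream processor such as Kafka Streams would maintain these counts continuously and also handle late-arriving events, which this batch function ignores.

```python
from __future__ import annotations
from collections import defaultdict

WINDOW_S = 60  # assumed: one-minute tumbling windows

def aggregate_clicks(events: list[tuple[int, str]]) -> dict[tuple[int, str], int]:
    """Count clicks per (window start, ad_id)."""
    counts: dict[tuple[int, str], int] = defaultdict(int)
    for ts, ad_id in events:
        window_start = ts - ts % WINDOW_S  # align each event to its window
        counts[(window_start, ad_id)] += 1
    return dict(counts)

events = [(0, "ad-1"), (15, "ad-1"), (59, "ad-2"), (60, "ad-1"), (125, "ad-2")]
print(aggregate_clicks(events))
# {(0, 'ad-1'): 2, (0, 'ad-2'): 1, (60, 'ad-1'): 1, (120, 'ad-2'): 1}
```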

Congratulations on making it through! With these two guides, you’ll be well-prepared for interviews that require an in-depth understanding of Kafka.

In a recent poll, I asked what topics you’d like to see next, and most of you voted for Kubernetes and Elasticsearch. Upcoming articles will be about these topics. Looking forward to exploring them together!
