A crash course on caching: What is caching and how does it work (Part 1/3)

Explore how caching works, the different types of caching (client-side, CDN, API Gateway), and why it’s critical for efficient application performance

Caching is a fundamental technique in computing that enables quick retrieval of frequently accessed data. Google has reported that an extra half-second of delay in delivering search results led to a 20% drop in traffic. By speeding up data access and reducing load times, caching boosts system performance and improves user experience, which directly affects business outcomes.

Since caching is a broad topic, I will explore its various facets in a comprehensive 3-part series. In this first issue, we will start with the basics and cover the following topics:

What is caching & why is it necessary?

How does caching work at the hardware and OS level?

Where to implement caching?

Real-world examples from large-scale systems


Before we dive deep, here's a quick caching cheat sheet 🧷.

What is caching and why is it necessary?

What is caching?

Caching is a technique used in computing to store frequently accessed data in a fast storage area, usually memory. Instead of reading from a database, which can be slow because of disk reads, the system retrieves the same data from the cache much faster. This speeds up responses and makes the system run more efficiently.

Example

Figure 2 illustrates a practical example of how caching works. In this scenario, data is first retrieved from the cache (stored in memory). If the data is not found in the cache, it is then fetched from the database. This contrasts with a system without caching, where data is always retrieved directly from the database, leading to longer response times.
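The read path described above is often called the cache-aside (or lazy-loading) pattern. Below is a minimal Python sketch of it, assuming a plain dictionary as the in-memory cache and a hypothetical `FAKE_DB` dictionary standing in for the slow database. A production system would use a real cache such as Redis and a real query instead.

```python
# Hypothetical stand-in for a slow database; in a real system this is a DB query.
FAKE_DB = {"user:1": {"name": "Alice"}, "user:2": {"name": "Bob"}}

cache = {}  # in-memory cache: the "fast storage area"


def get(key):
    """Return (value, status), checking the cache before the database."""
    if key in cache:              # cache hit: served straight from memory
        return cache[key], "hit"
    value = FAKE_DB.get(key)      # cache miss: fall back to the database
    if value is not None:
        cache[key] = value        # populate the cache for future reads
    return value, "miss"


print(get("user:1"))  # ({'name': 'Alice'}, 'miss')
print(get("user:1"))  # ({'name': 'Alice'}, 'hit')
```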

Key terms in caching

Cache Hit: When data is found in the cache, it’s called a cache hit, allowing quick access without going to the DB.

Cache Miss: A cache miss happens when the data isn’t in the cache, so the system has to fetch it from the DB.

Cache Hit Ratio: The cache hit ratio measures how effective the cache is. It is the number of cache hits divided by the total number of requests (hits plus misses); a higher ratio means more requests are served from the cache, which means better performance.
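As a worked example of the formula, hit ratio = hits / (hits + misses). The sketch below wraps a dictionary with hit and miss counters; `CountingCache` and its `loader` function are hypothetical names used only for illustration. For the request sequence a, b, a, a, c, a there are 3 misses (the first access to each key) and 3 hits, so the ratio is 3 / 6 = 0.5.

```python
class CountingCache:
    """A dict-backed cache that counts hits and misses to compute the hit ratio."""

    def __init__(self, loader):
        self.loader = loader  # called on a miss (e.g. a database query)
        self.store = {}
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self.store:
            self.hits += 1
            return self.store[key]
        self.misses += 1
        value = self.loader(key)
        self.store[key] = value
        return value

    def hit_ratio(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


counting_cache = CountingCache(loader=lambda k: k.upper())  # hypothetical loader
for key in ["a", "b", "a", "a", "c", "a"]:
    counting_cache.get(key)
print(counting_cache.hits, counting_cache.misses, counting_cache.hit_ratio())  # 3 3 0.5
```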

Pros & Cons of caching

Pros:

Faster data access: Caching speeds up data retrieval by avoiding slow database or disk access.

Reduces backend load: By serving frequent requests from the cache, it eases the load on databases, APIs, and backend systems.

Better user experience: Faster responses lead to smoother interactions. This improves user satisfaction.

Cons:

Limited capacity: Caches have far less space than disks, so they can hold only a subset of the data, and in-memory caches generally do not provide long-term persistence.

Stale data: Cached data can become outdated if not refreshed, causing inconsistencies with the DB.

Higher costs: Memory costs considerably more per gigabyte than disk storage, so large caches are expensive.

Cold cache delays: When the cache is empty (a "cold cache"), initial data requests may still experience slower response times. Note: We will discuss problems with caching in detail in a later issue.

Caching at the hardware level: CPU and OS caching explained

Hardware caching is built into systems to make data access faster. CPUs and operating systems have caches that help retrieve frequently used data quickly, reducing the need to access slower memory or disks. In this section, we’ll cover how CPU and OS-level caches work to improve system performance.

Figure 3 shows different mechanisms used by the CPU and OS for caching.

CPU Caching

CPUs use multi-level caches to keep frequently accessed instructions and data close to the cores for faster processing. There are four key types of caches found in the CPU:

L1 Cache - The fastest and smallest cache, typically 64 KB or less per core and often split into separate instruction and data caches. Each CPU core has its own dedicated L1 cache.

L2 Cache - The second level of caching, larger but slower than L1, typically ranging from 256 KB to a few MB and usually dedicated to each core.

L3 Cache - The largest but slowest of the three levels, typically several MB or more, and usually shared among the CPU cores.

Translation Lookaside Buffer (TLB) - Stores recently used address mappings to speed up virtual-to-physical memory translation. It resides in the Memory Management Unit (MMU) and helps optimize memory access.
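To make the TLB's role concrete, here is a software model of the idea. It is only a sketch, since a real TLB is hardware inside the MMU. It assumes 4 KiB pages, a hypothetical four-entry `PAGE_TABLE`, a two-entry TLB, and LRU replacement (real CPUs use a variety of replacement policies). A TLB hit skips the page-table lookup; a miss performs the lookup and caches the mapping.

```python
from collections import OrderedDict

PAGE_SIZE = 4096  # assumed 4 KiB pages

# Hypothetical page table: virtual page number -> physical frame number
PAGE_TABLE = {0: 7, 1: 3, 2: 9, 3: 1}


class TLB:
    """Tiny LRU cache of virtual-page -> physical-frame mappings."""

    def __init__(self, capacity=2):
        self.capacity = capacity
        self.entries = OrderedDict()  # least recently used first
        self.hits = 0
        self.misses = 0

    def translate(self, virtual_addr):
        vpn, offset = divmod(virtual_addr, PAGE_SIZE)
        if vpn in self.entries:
            self.hits += 1
            self.entries.move_to_end(vpn)         # mark as most recently used
        else:
            self.misses += 1
            self.entries[vpn] = PAGE_TABLE[vpn]   # "page walk" on a TLB miss
            if len(self.entries) > self.capacity:
                self.entries.popitem(last=False)  # evict least recently used
        return self.entries[vpn] * PAGE_SIZE + offset


tlb = TLB()
print(hex(tlb.translate(0x1010)))  # 0x3010 (virtual page 1 -> frame 3)
```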

OS-level caching

Page Cache - Kept in main memory, it holds recently and frequently accessed pages of file data, so repeated reads avoid slower disk access.

Inode Cache - An in-memory cache that stores file metadata (inodes). It speeds up access to file properties like size and permissions. This improves file system performance.

Where should caching be implemented?

Caching can be applied at different points in a system's infrastructure to enhance performance and reduce latency. Key areas include browsers, CDNs, API gateways, databases, and distributed caches. Let’s examine each one to see how caching optimizes system performance.

Client-side caching: Improve user experience and reduce latency

Also known as browser caching, this involves storing static assets like HTML, JavaScript, and images on the client side. This helps speed up page loads by avoiding repeated downloads from the server.

Figure 5 shows how HTML is cached in the browser with an expiration time. Future requests can then be served from the cache, instead of reloading from the server.

Example: When a user visits an e-commerce website, the browser caches images, stylesheets, and scripts. On future visits, these assets load from local storage. This speeds up the experience by avoiding unnecessary downloads.
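Expiration in browser caching is driven by HTTP headers such as `Cache-Control: max-age=<seconds>`. The toy model below stores a response with the time it was cached and serves it only while its age is below `max-age`. It deliberately ignores most of HTTP caching (revalidation with `ETag`, `no-cache`, `Vary`, and more), and the `BrowserCache` class is a hypothetical illustration, not how any particular browser is implemented. Time is passed in explicitly so the example is deterministic.

```python
import re
import time


class BrowserCache:
    """Toy HTTP cache that honors only the Cache-Control max-age directive."""

    def __init__(self):
        self.entries = {}  # url -> (body, stored_at, max_age_seconds)

    @staticmethod
    def max_age(cache_control):
        """Extract max-age (in seconds) from a Cache-Control value, if present."""
        match = re.search(r"\bmax-age=(\d+)", cache_control)
        return int(match.group(1)) if match else None

    def store(self, url, body, cache_control, now=None):
        age = self.max_age(cache_control)
        if age is None or "no-store" in cache_control:
            return  # not cacheable under these simplified rules
        self.entries[url] = (body, time.time() if now is None else now, age)

    def lookup(self, url, now=None):
        """Return the cached body while it is fresh, else None (refetch needed)."""
        entry = self.entries.get(url)
        if entry is None:
            return None
        body, stored_at, age = entry
        now = time.time() if now is None else now
        return body if now - stored_at < age else None


cache = BrowserCache()
cache.store("/index.html", "<html>home</html>", "public, max-age=300", now=0)
print(cache.lookup("/index.html", now=100))  # <html>home</html> (still fresh)
print(cache.lookup("/index.html", now=400))  # None (expired, refetch from server)
```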

Types of client-side caching:

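Local Storage Cache: Stores assets and data on the user's device. Static assets such as images and HTML are kept in the browser's built-in HTTP cache, while small key-value data can be saved with the Web Storage API (localStorage).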

Service Worker Cache: Allows applications to work offline by caching responses. It enables features like Progressive Web Apps (PWAs) to function without an internet connection.

CDN caching: Enhancing web performance through Content Delivery Network

A Content Delivery Network (CDN) caches static content such as images, videos, stylesheets, and scripts on servers located in various geographic areas. This helps reduce latency by delivering content from a server closest to the user.

Example: In video-streaming services like Netflix or YouTube, CDNs store video content in different locations. When a user in India watches a movie, the CDN delivers it from a nearby edge server instead of the original source.

Types of CDN caching:

Static Content Caching: Stores static files like images, videos, and scripts for quick access.

Dynamic Content Caching: Some CDNs can also cache dynamic content using methods like edge-side includes or smart caching algorithms.

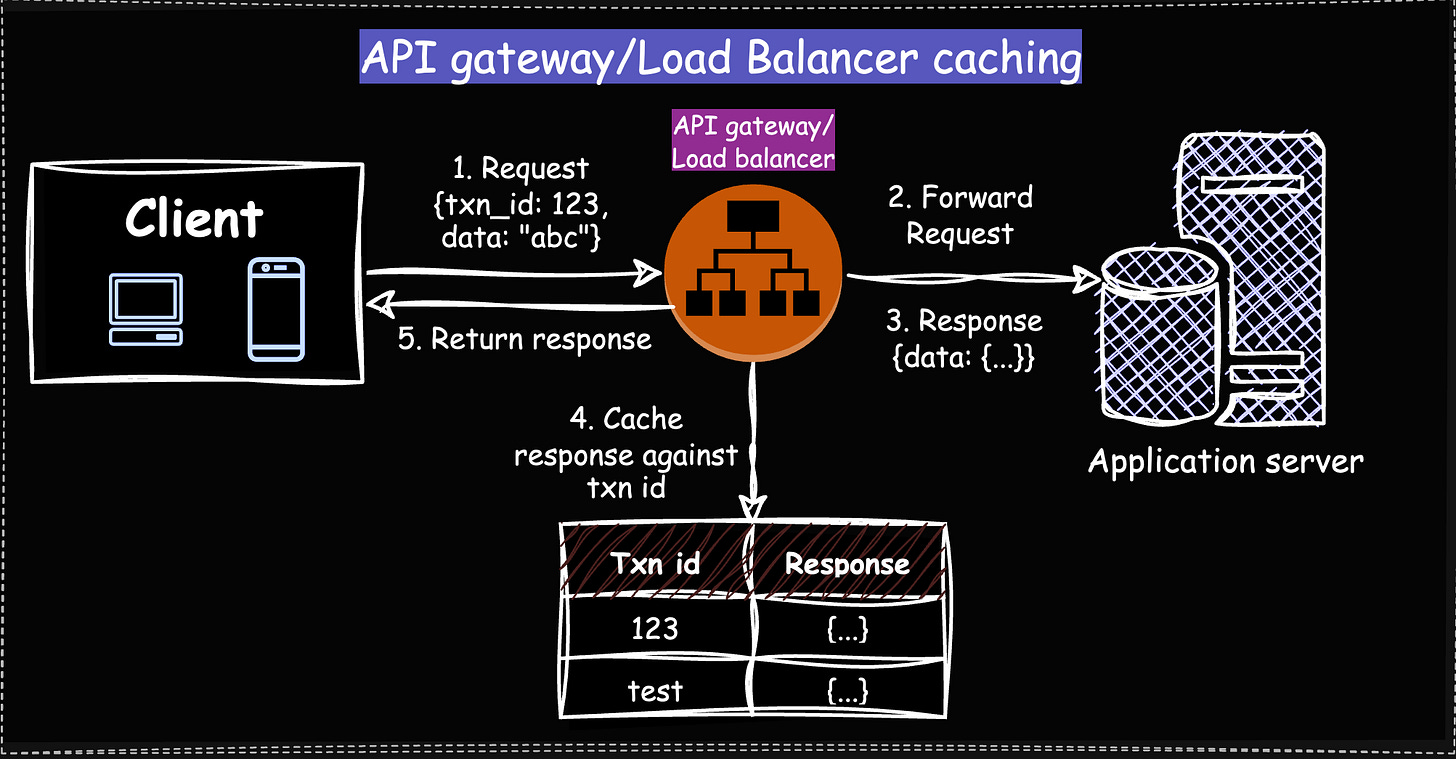
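The CDN flow can be sketched as: route the user to the nearest edge, serve from that edge's cache on a hit, and pull from the origin only on the first miss. In this sketch the edge names and round-trip times in `EDGE_RTT_MS` are made-up values, and "nearest" simply means lowest measured latency; real CDNs typically steer users with DNS or anycast routing.

```python
# Hypothetical origin content and edge locations; the round-trip times (ms) are
# made-up measurements from a user in India.
ORIGIN = {"/video/intro.mp4": b"<video bytes>"}
EDGE_RTT_MS = {"mumbai": 20, "frankfurt": 140, "virginia": 220}


class EdgeServer:
    """An edge location with its own cache that pulls from the origin on a miss."""

    def __init__(self, name):
        self.name = name
        self.cache = {}
        self.origin_fetches = 0

    def serve(self, path):
        if path not in self.cache:      # edge miss: fetch once from the origin
            self.origin_fetches += 1
            self.cache[path] = ORIGIN[path]
        return self.cache[path]         # edge hit: no trip to the origin


EDGES = {name: EdgeServer(name) for name in EDGE_RTT_MS}


def nearest_edge(rtts_ms):
    """Pick the edge with the lowest round-trip time for this user."""
    return EDGES[min(rtts_ms, key=rtts_ms.get)]


edge = nearest_edge(EDGE_RTT_MS)
for _ in range(3):                      # three viewers in the same region
    edge.serve("/video/intro.mp4")
print(edge.name, edge.origin_fetches)   # mumbai 1
```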

Caching at API Gateway/Load Balancer

API gateways cache responses for frequently accessed API endpoints to reduce the load on backend services. This is ideal for read-heavy APIs with relatively static data.

Example: A currency conversion API that updates its rates every 10 minutes can cache responses at the gateway, reducing the need to hit backend services for every request.

Types:

Per-Route Caching: Caches responses for specific API routes.

Header-Based Caching: Separate responses are cached for different values of request headers such as User-Agent or locale (for example, Accept-Language).
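For the currency-conversion example, a gateway cache could combine both types above: the cache key is the route (method and path) plus the values of selected headers, and each entry expires after a TTL matching the 10-minute update interval. `GatewayCache` and `rates_backend` are hypothetical; real gateways expose this behavior through their own configuration rather than code like this. Only `GET` requests are cached here, on the assumption that other methods may change state.

```python
import time


class GatewayCache:
    """Sketch of a gateway response cache: per-route keys, header variation, TTL."""

    def __init__(self, ttl_seconds, vary_headers=()):
        self.ttl = ttl_seconds
        self.vary = [h.lower() for h in vary_headers]
        self.entries = {}  # cache key -> (response, expires_at)

    def key(self, method, path, headers):
        lowered = {k.lower(): v for k, v in headers.items()}
        # Per-route part (method + path) plus the values of the headers we vary on.
        return (method, path) + tuple(lowered.get(h, "") for h in self.vary)

    def handle(self, method, path, headers, backend, now=None):
        now = time.time() if now is None else now
        if method != "GET":                   # only cache read-only requests
            return backend(method, path, headers)
        k = self.key(method, path, headers)
        cached = self.entries.get(k)
        if cached is not None and cached[1] > now:
            return cached[0]                  # answered by the gateway
        response = backend(method, path, headers)  # miss or expired
        self.entries[k] = (response, now + self.ttl)
        return response


backend_calls = []


def rates_backend(method, path, headers):
    """Hypothetical currency-rates service; records each call it receives."""
    backend_calls.append((method, path))
    return f"rates ({headers.get('Accept-Language', 'en')})"


gateway = GatewayCache(ttl_seconds=600, vary_headers=["Accept-Language"])  # 10 minutes
gateway.handle("GET", "/rates", {"Accept-Language": "en"}, rates_backend, now=0)    # miss
gateway.handle("GET", "/rates", {"Accept-Language": "en"}, rates_backend, now=300)  # hit
gateway.handle("GET", "/rates", {"Accept-Language": "fr"}, rates_backend, now=300)  # new variant
gateway.handle("GET", "/rates", {"Accept-Language": "en"}, rates_backend, now=700)  # expired
print(len(backend_calls))  # 3
```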

Distributed Caching

Distributed caching stores data across multiple nodes. It ensures quick access and high availability in large-scale systems. Highly scalable systems use it to improve access speed across multiple regions or services.

Example: A social media platform may store user session data in a distributed cache (e.g., Redis Cluster), ensuring that users can access their sessions quickly regardless of which region they’re in.

Redis and Memcached are two widely used distributed caching systems.

Figure 8 illustrates a typical setup in which 90% of read requests from clients are served by a distributed cache cluster with multiple nodes. This design ensures high availability: if one node goes down, data can still be served from another node that holds a replica. Only the remaining 10% of requests, the cache misses, go to the database.
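One common way to spread keys across cache nodes and place replicas is consistent hashing, sketched below. This is a swapped-in illustration: Redis Cluster itself assigns keys to 16,384 hash slots rather than using a hash ring. The node names such as `cache-a` are hypothetical, and each key is stored on a primary node plus the next distinct node on the ring as its replica, so a read can fall back to the replica when the primary is down.

```python
import bisect
import hashlib


def stable_hash(value):
    # Python's built-in hash() is randomized per process, so use a digest instead.
    return int(hashlib.sha256(value.encode()).hexdigest(), 16)


class HashRing:
    """Consistent-hash ring: each key maps to a primary node plus replica nodes."""

    def __init__(self, nodes, vnodes=100):
        # Each node is placed at many points ("virtual nodes") to spread keys evenly.
        self.ring = sorted((stable_hash(f"{n}#{i}"), n) for n in nodes for i in range(vnodes))
        self.points = [point for point, _ in self.ring]
        self.node_count = len(set(nodes))

    def nodes_for(self, key, replicas=2):
        """Walk clockwise from the key's position and collect distinct nodes."""
        wanted = min(replicas, self.node_count)
        start = bisect.bisect(self.points, stable_hash(key))
        chosen = []
        for i in range(len(self.ring)):
            node = self.ring[(start + i) % len(self.ring)][1]
            if node not in chosen:
                chosen.append(node)
                if len(chosen) == wanted:
                    break
        return chosen


def node_to_read(ring, key, down):
    """Read from the primary, falling back to a replica if the primary is down."""
    for node in ring.nodes_for(key):
        if node not in down:
            return node
    return None


ring = HashRing(["cache-a", "cache-b", "cache-c"])
primary, replica = ring.nodes_for("session:42")
print(primary, replica, node_to_read(ring, "session:42", down={primary}))
```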

Note: We will discuss distributed caching in detail in the next article.

Caching in Relational databases

Relational databases, such as MySQL, use in-memory caching to store frequently accessed data and, in some systems, query results. This approach speeds up subsequent access by reducing the need for costly disk I/O or for re-executing complex queries each time.

Example: An e-commerce site could cache the results of a popular query like "top-selling products," which doesn't change often, in a Redis cache to avoid re-executing the same query repeatedly.

Types of caches and related logs in relational DBs:

Transaction log: It records all atomic changes made to a database (e.g., credits and debits in banking applications). It is used primarily for recovery: after a failure, committed changes can be replayed and incomplete transactions rolled back.

Write-Ahead Logging (WAL): WAL is a specific logging technique where changes are written to a log before being applied to the database. This ensures that data can be recovered in case of a crash.

Replication log: It records changes made on the primary database, which are then sent to replicas to keep them consistent in distributed systems.

Bufferpool: An area in memory where frequently accessed data pages from the database are cached. Example: In e-commerce, product information that is accessed frequently is cached in the buffer pool. This allows for quick retrieval at peak shopping times.

Materialized views: It’s a precomputed result set that is stored like a table. It can be refreshed periodically to reflect changes in the underlying data. Example: In a reporting application, a materialized view aggregates sales data for quick access during analysis. This allows users to retrieve summary reports faster than querying the raw data directly.
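A materialized view can be emulated to show the idea. SQLite (used here because it ships with Python) has no native materialized views, so this sketch rebuilds a summary table on demand; PostgreSQL, for comparison, offers `CREATE MATERIALIZED VIEW` and `REFRESH MATERIALIZED VIEW`. The `sales` table, its rows, and `refresh_sales_summary` are made up for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE sales (region TEXT, amount REAL);
    INSERT INTO sales VALUES ('north', 100), ('north', 50), ('south', 70);
""")


def refresh_sales_summary(conn):
    """Rebuild the precomputed summary table (a manual 'materialized view')."""
    conn.executescript("""
        DROP TABLE IF EXISTS sales_summary;
        CREATE TABLE sales_summary AS
            SELECT region, SUM(amount) AS total, COUNT(*) AS orders
            FROM sales GROUP BY region;
    """)


refresh_sales_summary(conn)
# Reports read the small precomputed table instead of aggregating raw rows.
print(conn.execute("SELECT * FROM sales_summary ORDER BY region").fetchall())
```

Reads between refreshes see the precomputed, possibly stale, totals: the same freshness trade-off as any other cache.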

Caching in message queues for high-traffic applications

Message queues store messages on disk until consumers process them. You can set custom retention policies to keep messages for a specific period. This allows retries when consumers are unavailable or fail, for example when a consumer crashes mid-processing.

Example: In an e-commerce application, when a user places an order, the order details are sent to a message queue (e.g., RabbitMQ or Kafka). The message is stored on disk until a fulfillment service processes it. A retention policy keeps unprocessed messages for a set duration (e.g., 7 days) to enable retries in case of failures.
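The retention-and-retry behavior can be sketched as an append-only log where each message has an offset and messages expire by age rather than on consumption, which is loosely how Kafka topics behave (RabbitMQ queues, by contrast, usually remove a message once it is acknowledged). `RetainedLog` and the order payloads are hypothetical, and time is passed in explicitly to keep the example deterministic.

```python
class RetainedLog:
    """Append-only message log with time-based retention and re-readable offsets."""

    def __init__(self, retention_seconds):
        self.retention = retention_seconds
        self.messages = []   # list of (offset, timestamp, payload)
        self.next_offset = 0

    def append(self, payload, now):
        self.messages.append((self.next_offset, now, payload))
        self.next_offset += 1

    def expire(self, now):
        """Drop messages older than the retention window, even if never read."""
        self.messages = [m for m in self.messages if now - m[1] < self.retention]

    def read_from(self, offset):
        """Return retained messages at or after offset, so a consumer can retry."""
        return [m for m in self.messages if m[0] >= offset]


DAY = 24 * 3600
log = RetainedLog(retention_seconds=7 * DAY)  # e.g. 7-day retention
log.append({"order_id": 1}, now=0)
log.append({"order_id": 2}, now=1 * DAY)

# The fulfillment consumer processed only offset 0 before crashing; on restart it
# resumes from offset 1, so order 2 is retried rather than lost.
resume_offset = 1
print(log.read_from(resume_offset))
```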

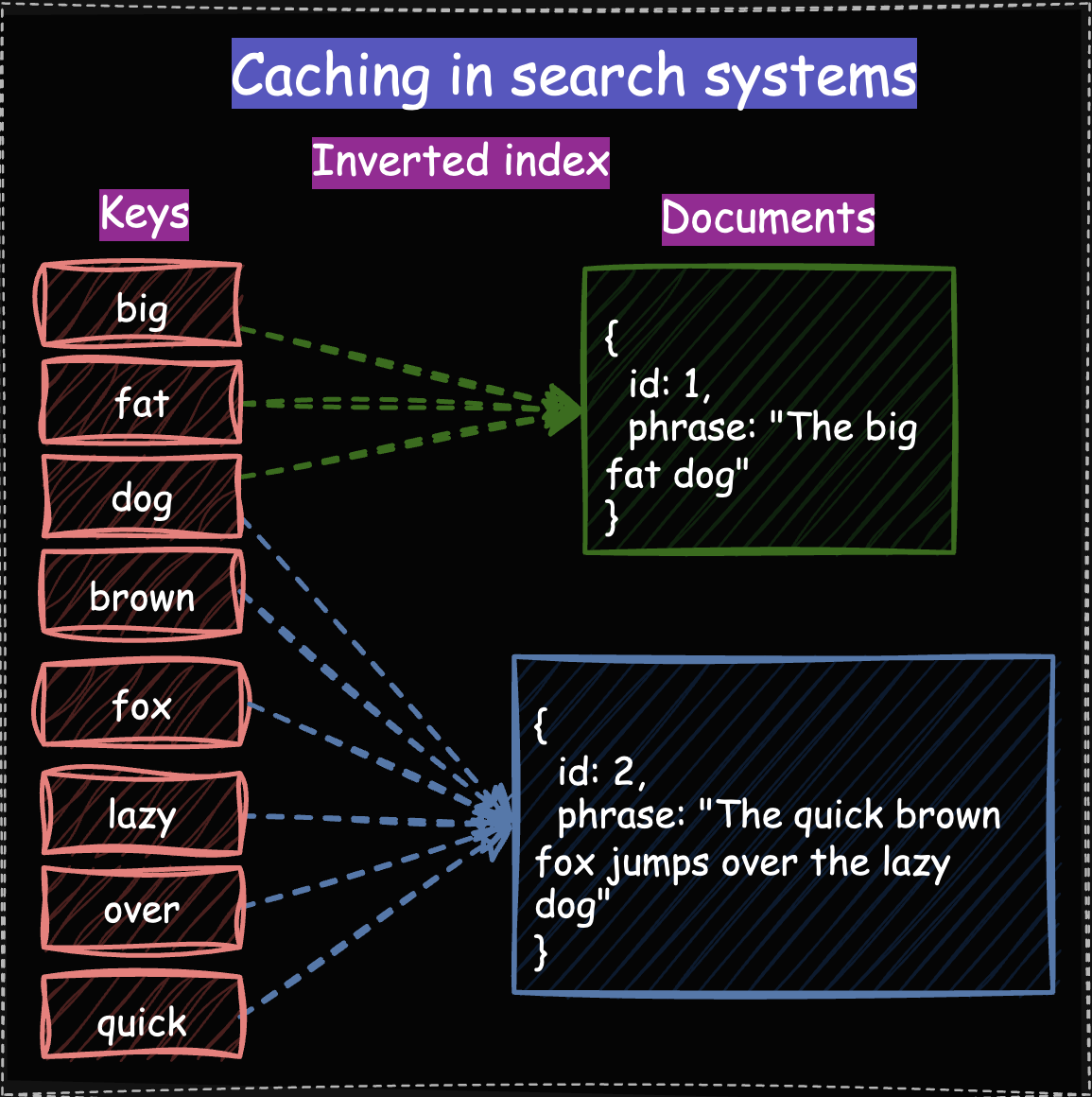

Caching in search systems

Search systems, such as Elasticsearch or Solr, index documents to facilitate quick retrieval based on queries. They use different indexing techniques, including forward and inverted indexing, to optimize search performance.

Types of caching in search systems:

Forward Index

The forward index maps documents to their terms, allowing quick access to the content of each document.

Example: A document editor such as Google Docs could maintain a forward index that links document IDs to headings, letting it quickly generate the table of contents for each document.

Inverted Index

An inverted index (Figure 11) maps terms to their corresponding documents, allowing for rapid lookups. It is the backbone of most modern search engines.

Example: In a search engine for a news website, the inverted index lists terms like "election," "president," and "vote," with pointers to the articles that contain those terms. If a user searches for "president," the system quickly retrieves all relevant articles without scanning every document.
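Both index types can be illustrated together: build a forward index (document to terms), then invert it into term-to-documents postings. The sketch assumes three hypothetical news headlines and a naive lowercase tokenizer; real engines such as Elasticsearch and Solr add analyzers, relevance scoring, and compressed on-disk postings.

```python
import re
from collections import defaultdict

# Hypothetical news articles: document id -> text
DOCS = {
    1: "President announces election date",
    2: "Voters prepare to vote in the election",
    3: "Sports: local team wins the final",
}


def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())


# Forward index: document -> set of terms it contains
forward_index = {doc_id: set(tokenize(text)) for doc_id, text in DOCS.items()}

# Inverted index: term -> documents containing it (built by inverting the forward index)
inverted_index = defaultdict(set)
for doc_id, terms in forward_index.items():
    for term in terms:
        inverted_index[term].add(doc_id)


def search(query):
    """Return ids of documents containing every query term, without scanning DOCS."""
    postings = [inverted_index.get(t, set()) for t in tokenize(query)]
    return sorted(set.intersection(*postings)) if postings else []


print(search("election"))            # [1, 2]
print(search("president election"))  # [1]
```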

How do Netflix, Facebook, and Twitter leverage caching for efficiency?

Let’s look at some real-world examples of caching in large-scale systems:

Netflix

Content Delivery Network (CDN): Netflix uses its own CDN, called Open Connect, to cache video content closer to the users. By deploying servers at ISPs (Internet Service Providers), Netflix reduces latency and bandwidth costs while ensuring high-quality video streaming.

Personalization and Recommendations: Netflix caches personalized recommendations and metadata about shows and movies. This allows the recommendation engine to quickly provide relevant suggestions without repeatedly querying the backend systems.

Facebook

Memcached Deployment: Facebook is known for its large-scale deployment of Memcached to cache data retrieved from its databases. This caching layer helps reduce the load on databases, allowing them to scale horizontally.

TAO (The Associations and Objects): Facebook developed TAO, a geographically distributed data store that caches and manages the social graph (relationships and interactions between users). TAO ensures that frequently accessed data, such as friend lists and likes, is served quickly, improving the overall user experience [Resource].

Twitter

Timeline Caching: Twitter caches timelines (feeds of tweets) to ensure that users see updates quickly. By caching these timelines, Twitter reduces the need to query the database for every user request, significantly improving response times.

Redis for In-Memory Caching: Twitter uses Redis for various caching purposes, including caching user sessions, trending topics, and other frequently accessed data.

Thanks for reading 🙏🏻!

This concludes the first part of our 3-part series on caching. In the next issue, we will explore key concepts such as distributed caching, types of caching strategies, and cache invalidation/eviction methods.

Creating each article, along with the accompanying diagrams, takes about 12-14 hours of research and hard work. If you enjoy my content, please ❤️ and subscribe to encourage me to keep writing! 🙏🏻
