What Are Rate Limiters: Core Concepts, Benefits, and Placement Strategies

Understanding rate limiting: Key benefits, core design concepts, and best practices for where to place rate limiters in system architecture

What is Rate Limiting?

Rate limiting is like a traffic cop for the internet: it controls how often a user or client can perform an action within a given period. It protects servers from overload and limits the damage abusive or malicious traffic can do, which keeps the system responsive for everyone else.



Benefits of Rate Limiting

Prevent server overload: A rate limiter rejects excessive requests before they exhaust server capacity, which helps absorb traffic spikes and mitigate Distributed Denial of Service (DDoS) attacks.

Prevent resource starvation: Capping the number of requests each user can make keeps any single client from monopolizing shared resources, so access stays fair for everyone.

Reduce costs: By controlling traffic, rate limiting helps cut down on unnecessary usage, ultimately lowering operational costs.

Designing Rate Limiters: Key Concepts and Principles

Designing a rate limiter involves grasping these essential concepts:

Identifier: Distinguishes individual users or systems, typically using unique identifiers like IP addresses or user IDs.

Window: Specifies the period during which requests are counted (e.g., a minute or an hour).

Limit: Sets the maximum number of requests allowed within the defined time window.

Figure 2 illustrates this concept with relevant examples:

Ex 1: Twitter limits the number of tweets per user to 300 per 3 hours →

[Identifier: user_id, Window: 3 hours, Limit: 300 tweets]

Ex 2: Users can create 10 accounts per day per IP address →

[Identifier: ip_address, Window: 1 day, Limit: 10 accounts]
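
To make the three concepts concrete, here is a minimal sketch of a fixed-window counter, assuming a single process with in-memory state. The class name `FixedWindowLimiter`, its parameters, and the sample IP address are hypothetical names chosen for illustration; a production limiter would usually keep its counters in a shared store so that all servers see the same counts.

```python
import time


class FixedWindowLimiter:
    """Allow at most `limit` requests per `window_seconds` for each identifier.

    Hypothetical illustration: state lives in this process only.
    """

    def __init__(self, limit, window_seconds, clock=time.monotonic):
        self.limit = limit                    # Limit: max requests per window
        self.window_seconds = window_seconds  # Window: length of the counting period
        self.clock = clock                    # injectable so the sketch is testable
        self.counters = {}                    # Identifier -> (window_index, count)

    def allow(self, identifier):
        window_index = int(self.clock() // self.window_seconds)
        stored_index, count = self.counters.get(identifier, (window_index, 0))
        if stored_index != window_index:
            count = 0  # a new window has started, so the count resets
        if count >= self.limit:
            return False  # over the limit; the stored count is left unchanged
        self.counters[identifier] = (window_index, count + 1)
        return True


# Ex 2 from above: 10 account creations per day per IP address.
signup_limiter = FixedWindowLimiter(limit=10, window_seconds=24 * 60 * 60)
results = [signup_limiter.allow("203.0.113.7") for _ in range(11)]
```

In this sketch the first ten calls for the same IP address are allowed and the eleventh is rejected until the next window begins. Each identifier is counted independently, so a second IP address starts with its own fresh quota.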

Where Should Rate Limiters Be Placed?

The next key question is: where should rate limiters be implemented?

This depends on the specific use case. For example, if rate limiting is needed for database queries, it would be placed at the database level. On the other hand, for blocking excessive traffic on high-traffic days, rate limiting could be applied at the CDN or API gateway.

Broadly, there are six key points in the infrastructure stack where rate limiters can be deployed. Let’s dive into each of them:

Rate limiting at Application Servers

This is a common rate-limiting strategy because many rate-limiting needs are custom and often require integration directly into the application code.

Examples:

1. Social Media: Twitter limits the number of tweets per user to 300 per 3 hours.

2. Live Streaming: Hotstar uses custom rate limiting to prevent abuse of its free tier by capping how much free-tier users can access.

Rate limiting at the CDN layer

💡💡 A Content Delivery Network (CDN) is a network of distributed servers that caches and delivers web content closer to users, speeding up load times and improving performance.

E.g.: When you watch a video on YouTube, it uses a CDN to deliver videos from servers near you, reducing buffering and improving streaming quality.

Rate limiting at the CDN level manages traffic at the edge, blocking excessive requests before they reach your main infrastructure.

Examples:

1. E-commerce: Control new user onboarding during high-traffic days by placing extra users in a CDN wait queue.

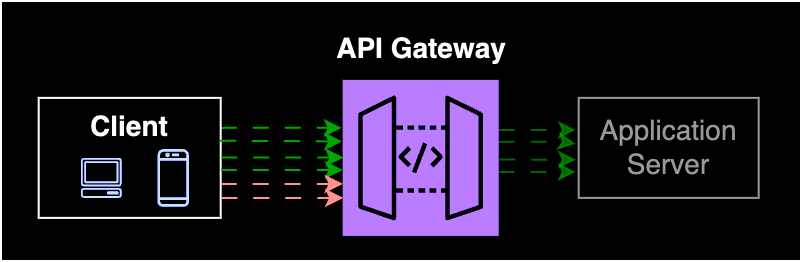

Rate Limiting at the API Gateway

💡💡 An API gateway is a server at the front of your infrastructure that manages incoming client requests and routes them to the appropriate services within your application.

E.g.: In a shopping app, the API gateway handles requests for product information, user accounts, and orders, directing them to the appropriate backend services.

Rate limiting at the API gateway level controls traffic between clients and backend services.

Examples:

1. SaaS: Free users are limited to 1,000 API requests per day, while enterprise users get 100,000 requests per day.

2. Social Media: Instagram blocks repeated scraping attempts from the same IP address or location.
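
The SaaS example can be sketched as a per-plan daily quota check. This is an illustration only, not how any particular gateway product implements tiers: `DailyQuota`, `Decision`, the plan names, and the API key are hypothetical, and the caller is assumed to pass the current day as a string. The limits come from the example above.

```python
from dataclasses import dataclass

# Limits taken from the SaaS example: requests per day for each plan.
DAILY_LIMITS = {"free": 1_000, "enterprise": 100_000}


@dataclass
class Decision:
    allowed: bool
    remaining: int  # requests left today after this decision


class DailyQuota:
    """Hypothetical in-memory quota tracker keyed by (api_key, day)."""

    def __init__(self, limits):
        self.limits = limits
        self.used = {}  # (api_key, day) -> requests already counted

    def check(self, api_key, plan, day):
        limit = self.limits[plan]  # the plan decides which limit applies
        key = (api_key, day)
        used = self.used.get(key, 0)
        if used >= limit:
            return Decision(allowed=False, remaining=0)
        self.used[key] = used + 1
        return Decision(allowed=True, remaining=limit - used - 1)


quota = DailyQuota(DAILY_LIMITS)
first = quota.check("key-123", "free", "2024-01-01")
```

Keying the counter by day means a new day starts from zero without any explicit reset step, at the cost of old entries accumulating until they are cleaned up.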

Rate limiting at the Ingress Gateway level

💡💡 An Ingress Gateway manages and routes external traffic into a Kubernetes cluster, handling tasks like load balancing and SSL termination.

It’s advantageous for efficiently directing traffic to different services, such as routing /user requests to one service and /payment requests to another.

Rate limiting at the Ingress Gateway controls traffic between the API gateway and the ingress gateway, or between internal services, as shown in the diagram.

Examples:

1. Internal dashboards: If both client applications and internal dashboards access the User Details API, rate limiting at the ingress gateway can prioritize requests from client-facing applications while restricting access for internal dashboards.

2. E-commerce: Limits requests between Order Service and Inventory Service to prevent overload.

Managing traffic with rate limiting at the database

Rate limiting between Application servers and Database servers protects the DB from overload by limiting heavy query traffic.

Examples:

1. Limiting the number of simultaneous DB connections to ensure fair access for all application replicas.
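
One way to cap simultaneous connections from a single replica is a semaphore around connection creation. The sketch below assumes each replica enforces its own cap; `ConnectionLimiter` and its `connect` callable are hypothetical, and `sqlite3` only stands in for a real database driver so the example runs on its own.

```python
import sqlite3
import threading
from contextlib import contextmanager


class ConnectionLimiter:
    """Hypothetical helper: cap simultaneous DB connections held by one replica."""

    def __init__(self, max_connections):
        self._slots = threading.BoundedSemaphore(max_connections)

    @contextmanager
    def connection(self, connect, timeout=None):
        # Wait up to `timeout` seconds for a free slot (None waits indefinitely).
        if not self._slots.acquire(timeout=timeout):
            raise TimeoutError("no free database connection slot")
        try:
            conn = connect()
            try:
                yield conn
            finally:
                conn.close()
        finally:
            self._slots.release()  # always hand the slot back


# Usage: at most 5 connections open at once from this replica.
limiter = ConnectionLimiter(max_connections=5)
with limiter.connection(lambda: sqlite3.connect(":memory:")) as conn:
    value = conn.execute("SELECT 1").fetchone()[0]
```

Giving every replica the same cap bounds the total load on the database at roughly the cap multiplied by the number of replicas, which is what keeps one busy replica from crowding out the others.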

Rate limiting at Egress Gateway for outbound control

💡💡 An Egress Gateway manages and routes outgoing traffic from a Kubernetes cluster, handling tasks like rate limiting and connection monitoring.

It’s advantageous for controlling traffic to external services, such as enforcing security policies for outbound connections and managing bandwidth usage effectively.

Third-party services often enforce their own rate limits, causing excess requests to fail. Rate limiting at the Egress Gateway helps prevent this by capping outgoing requests before they leave your infrastructure.

Examples:

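1. OAuth: Google caps the number of OAuth requests a third-party application can make. Capping outbound OAuth calls at the egress gateway during high-traffic login periods keeps your services within that quota.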
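
The idea of capping outgoing requests can be sketched in-process as a simple throttle that spaces calls evenly. In practice this policy would normally be configured on the egress proxy itself; the `OutboundThrottle` class, its parameters, and the rate of 4 calls per second below are hypothetical, and the sketch is not thread-safe.

```python
import time


class OutboundThrottle:
    """Hypothetical sketch: space outgoing calls to at most `max_per_second`."""

    def __init__(self, max_per_second, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = 1.0 / max_per_second
        self.clock = clock      # injectable for testing
        self.sleep = sleep      # injectable for testing
        self.next_allowed = 0.0  # earliest time the next call may be sent

    def wait_turn(self):
        """Block until the next call may go out; return the delay applied."""
        now = self.clock()
        delay = max(0.0, self.next_allowed - now)
        if delay > 0:
            self.sleep(delay)
        # Reserve the following slot one interval after this call's send time.
        self.next_allowed = max(now, self.next_allowed) + self.min_interval
        return delay


# Usage: call wait_turn() immediately before each request to the third party.
throttle = OutboundThrottle(max_per_second=4)
throttle.wait_turn()
```

Delaying requests locally turns what would have been rejected calls into slightly slower ones, which is usually cheaper than handling failures and retries from the third party.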

Conclusion

Thanks for reading! In this post, we’ve covered why rate limiting is important, its core concepts, and where to place rate limiters in your infrastructure. It’s important to:

Understand the scope of the rate-limiting problem to determine the appropriate infrastructure layer for implementation.

Communicate rate limits clearly to API users, ideally through response headers, to enable effective retry and back-off strategies.
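
As a rough sketch of communicating limits through response headers: the server attaches the current quota to each response, and the client uses `Retry-After` to decide how long to back off after an HTTP 429 (Too Many Requests). `Retry-After` is a standard HTTP header, while the `X-RateLimit-*` names are a widely used convention rather than a formal standard. The function names here are hypothetical, and only the seconds form of `Retry-After` is handled.

```python
import math


def rate_limit_headers(limit, remaining, reset_in_seconds):
    """Server side (hypothetical helper): describe the caller's current quota."""
    headers = {
        "X-RateLimit-Limit": str(limit),
        "X-RateLimit-Remaining": str(max(0, remaining)),
    }
    if remaining <= 0:
        # Round up so the client never retries before the window resets.
        headers["Retry-After"] = str(math.ceil(reset_in_seconds))
    return headers


def seconds_to_wait(status_code, headers, default=1.0):
    """Client side (hypothetical helper): how long to back off before retrying."""
    if status_code != 429:
        return 0.0
    value = headers.get("Retry-After")
    try:
        return float(value)
    except (TypeError, ValueError):
        # Header missing, or given as an HTTP date this sketch does not parse.
        return default


exhausted = rate_limit_headers(limit=1000, remaining=0, reset_in_seconds=12.3)
wait = seconds_to_wait(429, exhausted)
```

With the quota exhausted and 12.3 seconds left in the window, the server in this sketch sends `Retry-After: 13` and the client waits 13 seconds instead of retrying immediately.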

In the next post, we’ll explore key rate-limiting algorithms and how to choose the right one for your needs, accompanied by a cheat sheet 😉.
