What Are Rate Limiters: Key algorithms for better traffic control

This guide covers rate-limiting algorithms such as token bucket, leaky bucket, sliding window and more, along with the types of rate-limiting actions used for effective API traffic management.

In what are rate limiters, we covered two key aspects: core concept and optimal placement.

In this post, we'll dive deeper into:

Different types of rate-limiting actions

Key rate-limiting algorithms with their pros & cons.

Before we begin, here's a quick rate-limiting cheat sheet 🧷.

Types of rate-limiting actions

When building a rate limiter, it’s crucial to determine whether your use case requires rejecting excess requests outright or handling them with a delayed or lower-quality response. Generally, rate limiters perform one of three actions (Figure 2) on excess requests:

What is Blocking in rate limiting?

Excess requests exceeding the limit are denied access to the resource. It is commonly expressed as an error message such as HTTP status code 429 (Too Many Requests).

Examples:

Social media platforms like LinkedIn and Instagram return a 429 error when detecting web scraping attempts to prevent misuse.

Too many login attempts on public APIs (gmail login) are rejected to prevent brute-force (DDoS) attacks.

How does Throttling happen in rate-limiting?

At times, responses to requests from certain clients need to be throttled by redirecting them to lower-quality servers. This reduces the strain on high-performance resources while still maintaining service availability.

Examples:

Streaming platforms like Hotstar and Netflix reduce stream quality for users who exceed their data cap to manage bandwidth.

Cloud storage services like Google Drive slow down upload speeds for users who surpass their bandwidth limits.

What is Shaping in rate limiting?

Shaping involves directing requests from certain clients to a low-priority queue, causing their processing to be intentionally delayed.

Examples:

During high traffic days, CDNs put additional users in a wait queue, whose requests are processed only after previous users are done processing

In online gaming events, free-tier users may face delays in match-making as paying subscribers are prioritized for faster access.

Types of rate-limiting algorithms

Token Bucket Algorithm

How does Token Bucket work?

Tokens are added to a bucket at a constant rate.

Each incoming request consumes one token from the bucket.

Requests are denied if the bucket is empty and no tokens are available.

Pros & Cons of Token Bucket

✅ Simple to implement and understand.

✅ Accommodates short-term request spikes up to bucket capacity.

❌ Doesn’t ensure a perfectly smooth request.

Leaky Bucket Algorithm

How does Leaky Bucket work?

Requests are added to a fixed-size queue and processed at a steady rate.

Requests are discarded if the queue is full.

Pros & Cons of Leaky Bucket

✅ Requests are processed steadily, preventing sudden bursts from overwhelming the system.

❌ Slightly more complex to implement compared to Token Bucket.

Fixed Window Counter Algorithm

How does Fixed Window Counter work?

Stores the number of requests in the current window and limits them (eg: 100 requests per minute).

When a new request arrives, it's either accepted or rejected, depending on whether the current window can accommodate it.

Pros & Cons of Fixed Window Counter

✅ Simple to understand, and clear rate limits for each time window.

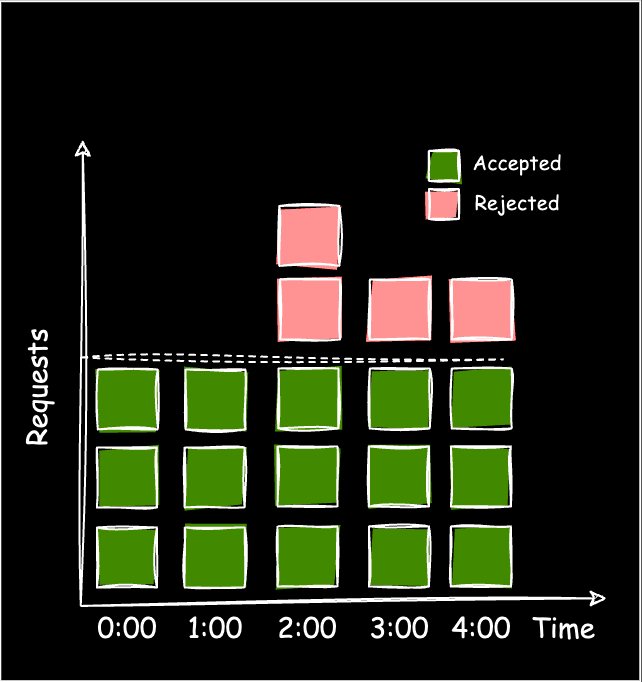

❌ Poor handling of boundary bursts; can allow twice the rate of requests at edges (shown in Figure 5).

Sliding Window Log Algorithm

This is the most commonly used rate-limiting algorithm.

How does Sliding Window Log work?

Records the timestamp of each incoming request.

Upon a new request, expired timestamps from previous windows are cleared.

The number of requests in the current window is counted. If it’s below the allowed limit, the request is accepted and logged; otherwise, it’s denied.

Pros & Cons of Sliding Window Log

✅ Precise and smooth rate-limiting with no edge cases.

❌ Memory-intensive for high-volume APIs as it requires storing all timestamps.

Sliding Window Counter Algorithm

How does Sliding Window Counter work?

This approach combines Fixed Window Counter and Sliding Window Log algorithms.

Instead of recording every request timestamp, only the total number of requests in the previous fixed window is logged.

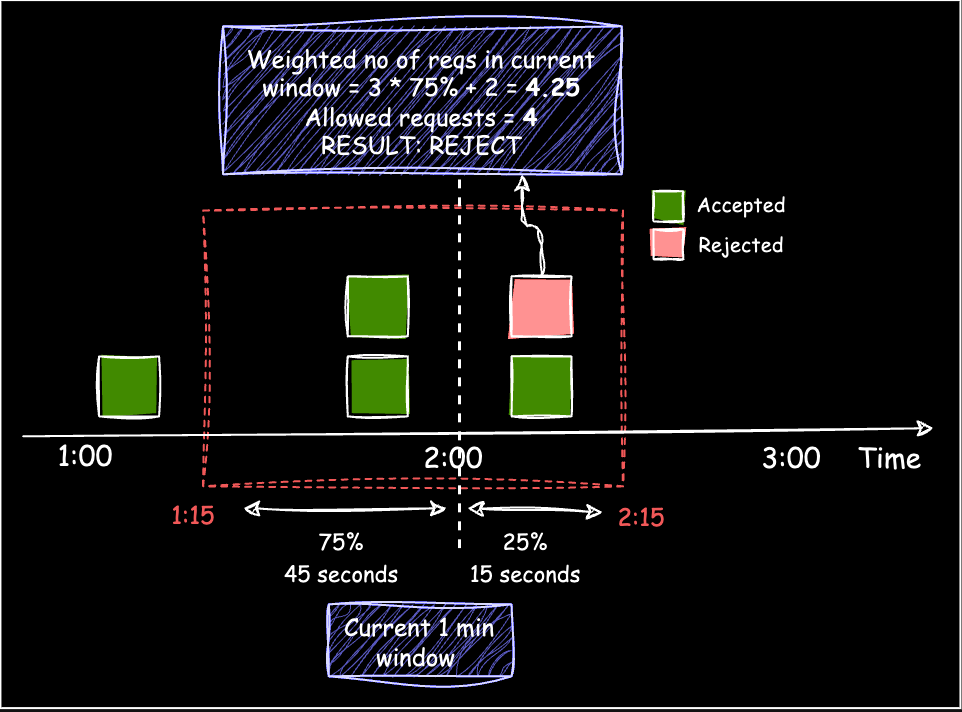

When a new request arrives, the “weighted number of requests” for the current window is calculated. For example (Figure 7):

Assume Window size: 1 min, Window capacity: 4 requests/min, and a new request arrives at t=2:15

The current window (1:15 - 2:15) consists of 75% of the previous window (1:15 - 2:00) and 25% of the current window (2:00 - 2:15).

Instead of tracking all timestamps, only the request count in the previous fixed window is logged.

The “weighted number of requests” is calculated as:

requestsInCurrentWindow + (weight * requestsInPreviousWindow) = 2 + (0.75 * 3) = 4.25.Since adding the new request would bring the total to 4.25 (exceeding the limit of 4), the request is rejected.

Pros & Cons of Sliding Window Counter

✅ More accurate than Fixed Window Counter

✅ More memory-efficient than Sliding Window Log because it only tracks the total number of requests per window, rather than storing individual timestamps for each request.

✅ Smooth out edges between windows.

❌ Slightly complex to implement.

Thanks for reading! I hope this helped clarify the different types of actions and algorithms used in designing a rate limiter. However, two key factors to consider, based on your use case are:

Choosing the right rate-limiting algorithm will depend on the specific behavior and needs of your system.

The data structure used to store rate limiter data should be chosen with efficiency and scalability in mind.

See ya in the next one 👋🏻!