What is Kafka? Key components explained

How Kafka Works: A Deep Dive into Kafka’s Architecture, Components, and Data Streaming Use Cases

Introduction to Kafka

Building high-performance systems requires a deep understanding of reliable messaging solutions like Apache Kafka. In this series, we’ll explore Kafka’s foundational elements and advanced features such as schema registry, commit strategies, and retry mechanisms, helping you design notification systems and event-driven architectures that can handle heavy data traffic.

As an early engineer at Barq, I’ve worked on scaling our systems to support 2 million users and have witnessed Kafka’s scaling challenges firsthand. I looked for a practical, interview-focused Kafka guide but couldn’t find any resource that fully addresses the real-world issues engineers face.

“Cracking system design interviews” covers how the "Design Deep Dive" part often decides interview outcomes, especially for senior roles. While “How to use Redis in system design interviews” covers Redis in detail, this two-part series focuses on Kafka, a component that often plays a central role in scaling discussions.


List of topics covered in this 2-part series:


Basics of Kafka

Apache Kafka is a distributed event-streaming platform originally developed at LinkedIn, which open-sourced it and later donated it to the Apache Software Foundation. It’s built to handle large volumes of data in real time, enabling multiple applications to share and process data streams efficiently.

Unlike tools that process data in batches at set times, Kafka allows data to be processed continuously as it arrives, which is crucial in areas like e-commerce, IoT, social media, and finance.

Let’s look at a relatable example to understand how Kafka is used.

A real-world example of Kafka

Consider a ride-hailing platform like Uber, where each ride generates a stream of updates: the driver’s location, trip status (e.g., started, ongoing, completed), and the estimated time of arrival (ETA).

These updates are placed in a queue by a process known as the producer. On the other side, another process called the consumer reads from this queue and performs its business logic.

Let’s look at the problems with this simple setup (Figure 2).

Problem 1: Scaling to Millions of Rides

Uber handles millions of rides per day. Each ride generates many events, creating a huge volume of data that a single queue can’t handle.

Solution: To manage this, Kafka allows data to be grouped into topics. Different types of updates are sent to separate topics, as shown in Figure 3:

driver-locations: For live location updates of drivers.

trip-updates: For ride status updates (start, ongoing, or completed).

Problem 2: Consumers Overwhelmed by Location Data

With so many location updates coming in, a single consumer quickly becomes overloaded, slowing down processing and causing delays.

Solution: Kafka allows multiple consumers to work together in a consumer group, where each event is handled by only one consumer in the group. For example, Figure 3 shows the driver-location-group, which has two consumers, each handling part of the data, speeding up processing and avoiding duplicate work.

Problem 3: High-Volume Data and Processing Delays

A global platform like Uber has thousands of rides happening at once. If all updates go to the same topic, it can create bottlenecks, slowing down tasks like ETA prediction.

Solution: Kafka lets us split each topic into partitions and route messages to partitions based on a key (Figure 4). For example, the driver-locations topic can use the driver ID as the key, so all updates from a given driver go to the same partition while different drivers are spread across partitions. This balances the workload across consumers, reducing delays and making sure each update is processed promptly.

Why choose Kafka? Key benefits in distributed systems

Scalability: Kafka can handle millions of messages per second by spreading data across multiple servers, making it suitable for high-traffic applications.

Reliability: Kafka’s replication ensures that data remains available even if some servers fail, providing strong reliability for critical applications.

Independent Communication: Kafka’s design allows services to send and receive messages independently, enabling multiple services to access the same data without direct connections.

Data Persistence: Kafka can store data for a set time, letting services replay past data when needed, which is useful for reprocessing after failures or for accessing historical data.

Kafka components explained

In the ride-hailing example above, we highlighted several components through a problem-solution approach. Now, let’s explore each of these components in greater detail.

Figure 5 shows a high-level architecture with all the components in a Kafka setup.

Kafka topics

A topic is a named stream that holds one specific type of data. For instance, in the ride-hailing example above, separate topics were created for specific use cases (e.g., “driver-locations” or “trip-updates”).

Kafka has configurable retention policies for topics. For example, a topic can be set to keep data only for a few days, after which the older messages are automatically deleted.
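As a minimal sketch of the retention example, the TypeScript below expresses “keep data for three days” through `retention.ms`, Kafka’s topic-level time-based retention setting. The topic object is shaped like the entries kafkajs’s `admin.createTopics()` accepts (`topic`, `numPartitions`, `replicationFactor`, `configEntries`), but nothing here talks to a cluster; the partition count, replication factor, and the `isEligibleForDeletion` helper are illustrative assumptions.

```typescript
// Express "keep data for N days" as Kafka's topic-level retention.ms setting.
const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Kafka topic configs are passed as string name/value pairs.
function retentionMsForDays(days: number): string {
  return String(days * MS_PER_DAY);
}

interface TopicConfigEntry {
  name: string;
  value: string;
}

// Shaped like a topic entry for kafkajs admin.createTopics({ topics: [...] }).
interface TopicSpec {
  topic: string;
  numPartitions: number;
  replicationFactor: number;
  configEntries: TopicConfigEntry[];
}

const threeDaysMs = retentionMsForDays(3);

// Illustrative values: 6 partitions, each stored on 3 brokers.
const driverLocationsTopic: TopicSpec = {
  topic: "driver-locations",
  numPartitions: 6,
  replicationFactor: 3,
  configEntries: [{ name: "retention.ms", value: threeDaysMs }],
};

// Simplified retention check for a single record. Real brokers delete whole log
// segments once the newest record in a segment is older than retention.ms.
function isEligibleForDeletion(recordTimestampMs: number, nowMs: number, retentionMs: number): boolean {
  return nowMs - recordTimestampMs > retentionMs;
}

const nowMs = Date.UTC(2024, 0, 10);
console.log(threeDaysMs); // 259200000
console.log(isEligibleForDeletion(Date.UTC(2024, 0, 6), nowMs, Number(threeDaysMs))); // true (4 days old)
console.log(isEligibleForDeletion(Date.UTC(2024, 0, 8), nowMs, Number(threeDaysMs))); // false (2 days old)
```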

Kafka partitions: a key to parallel processing

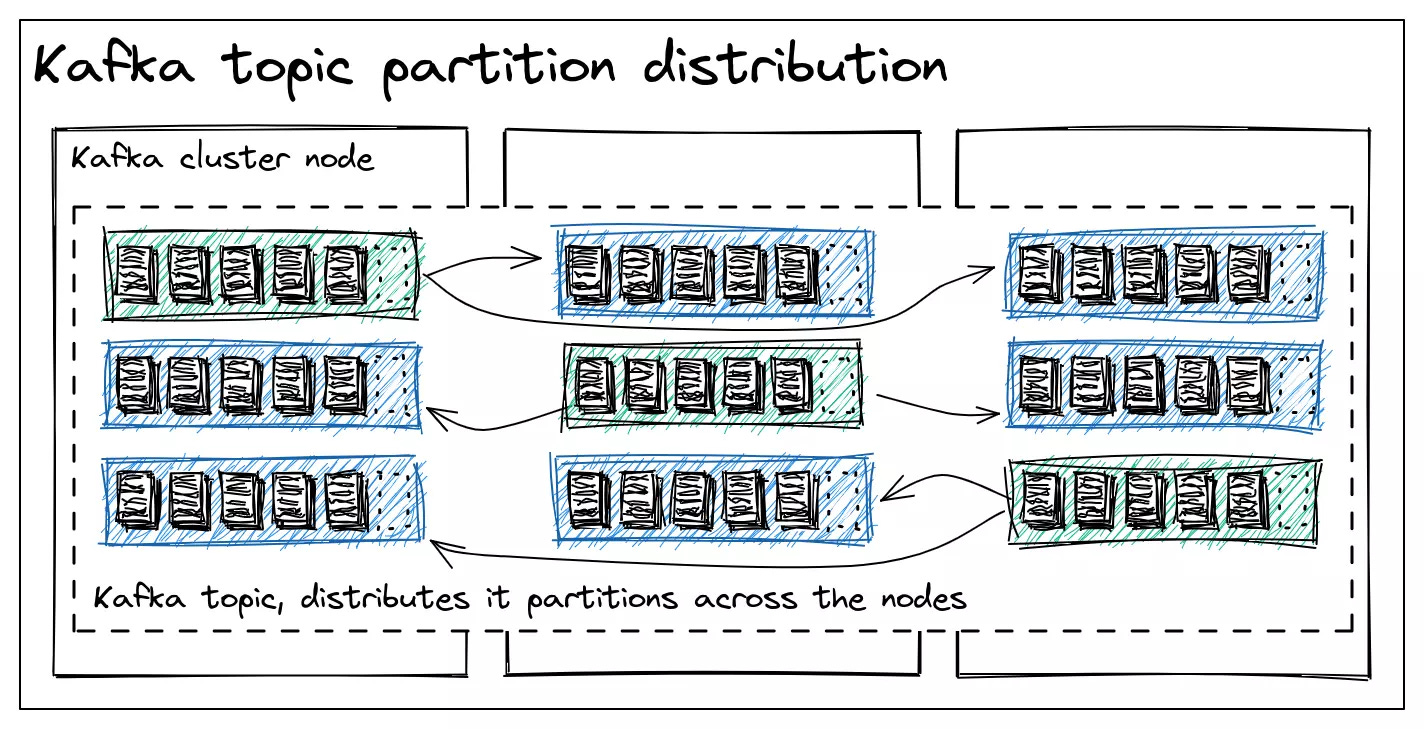

Each topic can be split into multiple partitions, allowing messages to be processed independently. This makes Kafka scalable because messages are spread across multiple servers instead of all being stored in one place.

Partitions are replicated across different servers (or “brokers”) in a Kafka cluster. This replication ensures that if one server fails, other servers have copies of the data, making Kafka resilient against data loss and system failures.

Kafka brokers: distributing and managing data streams

Brokers are the servers in a Kafka cluster that store data and serve read and write requests. Each broker stores one or more partitions of a topic and handles requests from clients, both producers (which write data) and consumers (which read it).

In Kafka, each partition has one broker assigned as the leader, which manages the read and write operations. Other brokers have replica copies of the data in the partition but don’t process requests unless the leader fails.

Kafka producers: creating and sending messages

Producers are services or applications that send messages to Kafka topics. They decide which topic and partition to send data to, often based on a key (e.g., driver ID). This ensures that messages related to the same entity (like a driver) are sent to the same partition, preserving their order.

Producers can be set up with SSL/TLS for secure data transfer. They can also be configured as idempotent producers, so that if a send is retried (for example, after a lost acknowledgment), the broker does not write a duplicate copy of the message to the partition.
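The idea behind idempotent producers can be sketched as a toy model, assuming a single partition: the producer tags each write with a producer ID and an increasing sequence number, and the broker refuses to append a sequence number it has already seen. The class and field names below are hypothetical, and real brokers track sequence numbers per batch for each (producer ID, partition) pair. In practice you enable the feature rather than implement it, e.g. `enable.idempotence=true` in the Java client or `kafka.producer({ idempotent: true })` in kafkajs.

```typescript
interface ProducedRecord {
  producerId: number;
  sequence: number; // increases by 1 for each new record from this producer
  value: string;
}

type AppendResult = "appended" | "duplicate" | "out-of-order";

// Broker-side view of one partition that deduplicates retried writes.
class PartitionLog {
  readonly records: ProducedRecord[] = [];
  private readonly lastSequence = new Map<number, number>(); // producer ID -> last written sequence

  append(rec: ProducedRecord): AppendResult {
    const last = this.lastSequence.get(rec.producerId) ?? -1;
    if (rec.sequence <= last) return "duplicate"; // already written: ack again, don't re-append
    if (rec.sequence !== last + 1) return "out-of-order"; // a gap means an earlier record is missing
    this.records.push(rec);
    this.lastSequence.set(rec.producerId, rec.sequence);
    return "appended";
  }
}

const partitionLog = new PartitionLog();
const firstAttempt: ProducedRecord = { producerId: 7, sequence: 0, value: "driver-42 @ 12.97,77.59" };
console.log(partitionLog.append(firstAttempt)); // appended
// The acknowledgment was lost, so the producer retries with the SAME sequence number.
console.log(partitionLog.append(firstAttempt)); // duplicate
console.log(partitionLog.append({ producerId: 7, sequence: 1, value: "driver-42 @ 12.98,77.60" })); // appended
console.log(partitionLog.records.length); // 2
```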

Kafka consumers: reading and processing data streams

Consumers are services or applications that read messages from Kafka topics. They consume messages at their own pace, which allows them to process data independently and resume from where they left off if interrupted.

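Each consumer tracks its “offset”, which marks its position in a partition. By convention, the committed offset is the offset of the next message to read, one past the last message that was successfully processed. By tracking offsets, Kafka enables consumers to resume from the correct position after a failure.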

Consumers can be grouped into “consumer groups”, allowing Kafka to distribute messages across multiple consumers. Kafka assigns each partition of a topic to exactly one consumer in the group. For example, with six partitions and three consumers, each consumer reads two partitions; with more consumers than partitions, the extra consumers sit idle. As a result, each message is delivered to only one consumer within the group.
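The partition-to-consumer rule above can be illustrated with a small round-robin assignment function. This is a simplified stand-in, assuming a single topic: in real Kafka a configurable assignor (range, round-robin, sticky, and so on) computes the assignment during a rebalance, and the function and consumer names here are hypothetical.

```typescript
// Round-robin assignment of one topic's partitions to the consumers in a group.
// Every partition gets exactly one owner; a consumer may own several partitions,
// or none at all if there are more consumers than partitions.
function assignPartitions(partitions: number[], consumerIds: string[]): Map<string, number[]> {
  // Sort so every member computes the same assignment from the same inputs.
  const members = [...consumerIds].sort();
  const assignment = new Map<string, number[]>();
  for (const id of members) assignment.set(id, []);
  if (members.length === 0) return assignment; // nobody to assign to

  partitions.forEach((partition, i) => {
    const owner = members[i % members.length];
    assignment.get(owner)!.push(partition);
  });
  return assignment;
}

const groupMembers = ["consumer-a", "consumer-b", "consumer-c"];
// Six partitions, three consumers: a -> [0, 3], b -> [1, 4], c -> [2, 5]
console.log(assignPartitions([0, 1, 2, 3, 4, 5], groupMembers));
// Two partitions, three consumers: consumer-c gets [] and sits idle
console.log(assignPartitions([0, 1], groupMembers));
```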

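ZooKeeper: ensuring fault tolerance and scalability

ZooKeeper is a separate service used to coordinate the Kafka brokers. It stores metadata about the cluster, including which broker is responsible for each partition. Newer Kafka versions can run without ZooKeeper by using the built-in KRaft consensus protocol, and Kafka 4.0 removed ZooKeeper support entirely.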

When a broker fails, ZooKeeper helps reassign leadership of its partitions to another broker, ensuring Kafka continues to operate smoothly.

How does Kafka work?

Step 1: How are messages produced in Kafka?

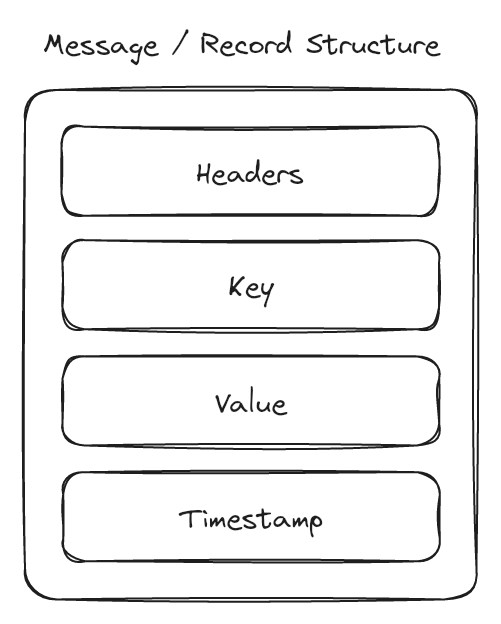

When an event occurs, a Kafka producer formats a message (often called a record) and sends it to a Kafka topic. Each message includes a required field (value), which contains the main data, and three optional fields:

Headers: Optional key-value pairs that store metadata about the message, similar to HTTP headers.

Key: Determines which partition the message goes to, ensuring that all messages with the same key (for example, the same driver ID) go to the same partition.

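Timestamp: Records when the event occurred (or, depending on the topic’s configuration, when the broker appended it). Order within a partition comes from offsets, not timestamps; timestamps are used for time-based retention and for looking up messages by time.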

For example, if a producer is set up to send messages on driver locations, it might send a message with the driver’s ID as the key, the current GPS coordinates as the value, and optional metadata (e.g., device type) in the headers.
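A sketch of that driver-location message in TypeScript. The object is shaped like the messages kafkajs accepts in `producer.send({ topic, messages })` (`key`, `value`, `headers`, and `timestamp`, which kafkajs types as a string of epoch milliseconds), but the interface is declared locally so the example runs without a broker; the header name and coordinate values are illustrative assumptions.

```typescript
// One record for the driver-locations topic: key, value, headers, timestamp.
interface DriverLocationRecord {
  key: string | null;               // used by the partitioner
  value: string;                    // the main payload (here, JSON)
  headers?: Record<string, string>; // optional metadata
  timestamp?: string;               // optional event time, epoch ms as a string
}

function buildDriverLocationRecord(
  driverId: string,
  lat: number,
  lng: number,
  deviceType: string,
  eventTimeMs: number,
): DriverLocationRecord {
  return {
    key: driverId, // same driver -> same key -> same partition
    value: JSON.stringify({ driverId, lat, lng }),
    headers: { "device-type": deviceType },
    timestamp: String(eventTimeMs),
  };
}

const locationRecord = buildDriverLocationRecord("driver-42", 12.9716, 77.5946, "android", 1700000000000);
console.log(locationRecord.key);   // driver-42
console.log(locationRecord.value); // {"driverId":"driver-42","lat":12.9716,"lng":77.5946}
```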

Step 2: How does Kafka partition messages?

Kafka decides the partition for each message depending on whether a key is present or not:

If the key is specified, the producer’s partitioner applies a hash function to the key (murmur2 in the Java client), so all messages with the same key end up in the same partition, as long as the topic’s partition count doesn’t change.

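If no key is provided, the producer spreads messages across the available partitions instead, for example round-robin or, in newer Java clients, with a “sticky” strategy that fills a batch for one partition before moving to the next. Producers can also be configured with custom partitioning logic.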
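The two partitioning rules above can be sketched as a small TypeScript partitioner. To keep it self-contained it uses FNV-1a as the hash; that is an assumption standing in for murmur2, which Kafka’s Java client uses, so the partition numbers will not match a real cluster. The class name is hypothetical.

```typescript
// 32-bit FNV-1a hash over the key's UTF-16 code units (stand-in for murmur2).
function fnv1a32(input: string): number {
  let hash = 0x811c9dc5;
  for (let i = 0; i < input.length; i++) {
    hash ^= input.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193);
  }
  return hash >>> 0; // force an unsigned 32-bit result
}

class ToyPartitioner {
  private readonly numPartitions: number;
  private nextRoundRobin = 0;

  constructor(numPartitions: number) {
    this.numPartitions = numPartitions;
  }

  partitionFor(key: string | null): number {
    if (key !== null) {
      return fnv1a32(key) % this.numPartitions; // same key -> same partition
    }
    const partition = this.nextRoundRobin % this.numPartitions; // no key: rotate
    this.nextRoundRobin++;
    return partition;
  }
}

const partitioner = new ToyPartitioner(4);
console.log(partitioner.partitionFor("driver-42") === partitioner.partitionFor("driver-42")); // true
console.log([null, null, null, null, null].map((k) => partitioner.partitionFor(k)).join(",")); // 0,1,2,3,0
```

Because the partition is computed as `hash % numPartitions`, adding partitions to a topic changes where existing keys map, which is why per-key ordering only holds while the partition count stays fixed.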

Step 3: Broker assignment in Kafka

After the producer picks a partition, it looks up which broker currently leads that partition using cluster metadata it fetches from the brokers. It then sends the message directly to that leader broker.
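A toy model of this lookup, assuming the producer already holds a cached copy of the cluster metadata: the partition’s current leader ID is resolved to a broker address, and that is where the record is sent. The broker IDs, addresses, and type names are hypothetical; real clients fetch this data with Metadata requests and refresh it when a broker reports that it is no longer the leader.

```typescript
interface PartitionInfo {
  partition: number;
  leaderId: number;     // broker currently leading this partition
  replicaIds: number[]; // brokers holding a copy
}

interface ClusterMetadata {
  brokers: Map<number, string>;         // broker ID -> host:port
  topics: Map<string, PartitionInfo[]>; // topic -> partition layout
}

// Resolve where a record for (topic, partition) must be sent: the leader's address.
function leaderAddressFor(metadata: ClusterMetadata, topic: string, partition: number): string {
  const info = metadata.topics.get(topic)?.find((p) => p.partition === partition);
  if (!info) throw new Error(`no metadata for ${topic}-${partition}; refresh needed`);
  const address = metadata.brokers.get(info.leaderId);
  if (!address) throw new Error(`unknown broker ${info.leaderId}; refresh needed`);
  return address;
}

const cachedMetadata: ClusterMetadata = {
  brokers: new Map([
    [1, "broker-1:9092"],
    [2, "broker-2:9092"],
    [3, "broker-3:9092"],
  ]),
  topics: new Map([
    [
      "driver-locations",
      [
        { partition: 0, leaderId: 1, replicaIds: [1, 2, 3] },
        { partition: 1, leaderId: 2, replicaIds: [2, 3, 1] },
      ],
    ],
  ]),
};

console.log(leaderAddressFor(cachedMetadata, "driver-locations", 1)); // broker-2:9092
```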

Step 4: Kafka’s message storage

Each partition in Kafka functions as an append-only log (Figure 8), where messages are added sequentially at the end of the log file. This log structure is central to Kafka’s architecture for several reasons:

Immutability: Once written, messages are never modified in place (they are removed only later, in bulk, by retention or compaction), avoiding inconsistencies.

Efficiency: Writing messages to the end of a log file minimizes disk seek times, making it a quick operation.

Scalability: The simplicity of the append-only log allows Kafka to scale horizontally by adding more partitions across multiple brokers.

Figure 9 shows sample producer code using kafkajs, a popular Node.js client for Kafka.
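The append-only behavior described above can be modeled in a few lines of TypeScript, assuming an in-memory array in place of Kafka’s on-disk segment files and ignoring retention (so an offset is simply the array index). The class name is hypothetical; the point is that writes only go to the end and existing entries are never changed.

```typescript
interface LogEntry {
  offset: number;
  key: string | null;
  value: string;
}

class AppendOnlyLog {
  private readonly entries: LogEntry[] = [];

  // Writes only ever go to the end; the new entry gets the next offset.
  append(key: string | null, value: string): number {
    const offset = this.entries.length; // no retention here, so offset == array index
    this.entries.push(Object.freeze({ offset, key, value })); // frozen: never modified later
    return offset;
  }

  // Sequential read of up to maxRecords entries, starting at fromOffset.
  read(fromOffset: number, maxRecords: number): readonly LogEntry[] {
    return this.entries.slice(fromOffset, fromOffset + maxRecords);
  }

  // The offset the next appended record will receive.
  get endOffset(): number {
    return this.entries.length;
  }
}

const partition0 = new AppendOnlyLog();
partition0.append("driver-42", "12.97,77.59"); // offset 0
partition0.append("driver-7", "28.61,77.20");  // offset 1
partition0.append("driver-42", "12.98,77.60"); // offset 2
console.log(partition0.read(1, 10).map((e) => e.offset).join(",")); // 1,2
console.log(partition0.endOffset); // 3
```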

Step 5: Managing message offsets in Kafka

Each message in a Kafka partition is assigned a unique, sequential offset within that partition, which helps consumers track their position as they read messages from the topic (Figure 10). By keeping track of offsets, consumers can resume reading from the last committed position after an interruption or failure, so no messages are skipped; whether some messages get processed twice depends on when offsets are committed.

Consumers can have different types of offset commit strategies, which I will cover in the next article.
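A toy consumer makes the offset bookkeeping concrete. It assumes a single partition and follows Kafka’s convention that the committed offset points at the next record to read; the in-memory map stands in for Kafka’s internal `__consumer_offsets` topic, and the function and group names are hypothetical. It uses the simplest possible approach (commit after processing a batch) and shows what happens when a consumer crashes between processing and committing.

```typescript
// The records stored in one partition; the array index is the offset.
const partitionRecords = ["loc-1", "loc-2", "loc-3", "loc-4", "loc-5"];

// group ID -> committed offset (the offset of the NEXT record to read)
const committedOffsets = new Map<string, number>();

function pollAndProcess(groupId: string, maxRecords: number, processed: string[], crashBeforeCommit = false): void {
  const start = committedOffsets.get(groupId) ?? 0; // resume from the committed position
  const batch = partitionRecords.slice(start, start + maxRecords);
  for (const rec of batch) processed.push(rec); // stand-in for business logic
  if (crashBeforeCommit) return; // work was done, but the commit never happened
  committedOffsets.set(groupId, start + batch.length);
}

const run1: string[] = [];
pollAndProcess("eta-service", 2, run1); // processes loc-1, loc-2; commits offset 2

const run2: string[] = [];
pollAndProcess("eta-service", 2, run2, true); // processes loc-3, loc-4, then crashes

const run3: string[] = [];
pollAndProcess("eta-service", 10, run3); // restarts from committed offset 2
console.log(run3.join(", ")); // loc-3, loc-4, loc-5 (loc-3 and loc-4 are processed a second time)
```

Committing before processing would flip the trade-off: a crash could then skip records instead of repeating them.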

Step 6: Kafka replication for fault tolerance

Kafka uses a leader-follower model for replication to ensure data durability and availability.

Leader Replica: Each partition has a leader replica located on one broker, which handles all read and write requests.

Follower Replicas: Each partition also has follower replicas on different brokers that are ready to take over if the leader fails.

Synchronization: Followers continuously sync with the leader to ensure they hold the latest data. If the leader replica for a partition fails, one of the in-sync followers is promoted to become the new leader, minimizing downtime.

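In ZooKeeper-based deployments, ZooKeeper tracks broker health and cluster metadata, and the Kafka controller (a broker elected through ZooKeeper) reassigns partition leadership when a broker goes down. The data itself is replicated by followers fetching from the leader.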
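The leader-follower flow can be sketched as a toy model of one partition. It assumes followers copy data only when `replicate()` is called and treats a replica as in sync when its log is as long as the leader’s; real Kafka instead uses a time-based lag threshold (`replica.lag.time.max.ms`) and a controller to pick the new leader. The class name is hypothetical. Promoting only in-sync followers mirrors Kafka’s default of `unclean.leader.election.enable=false`.

```typescript
class ReplicatedPartition {
  leaderId: number;
  readonly logs = new Map<number, string[]>(); // broker ID -> that replica's log
  private readonly alive = new Set<number>();

  constructor(replicaIds: number[]) {
    if (replicaIds.length === 0) throw new Error("need at least one replica");
    this.leaderId = replicaIds[0];
    for (const id of replicaIds) {
      this.logs.set(id, []);
      this.alive.add(id);
    }
  }

  // Producers write to the leader only.
  write(value: string): void {
    this.logs.get(this.leaderId)!.push(value);
  }

  // A follower fetches whatever it is missing from the leader's log.
  replicate(followerId: number): void {
    const leaderLog = this.logs.get(this.leaderId)!;
    const followerLog = this.logs.get(followerId)!;
    followerLog.push(...leaderLog.slice(followerLog.length));
  }

  // Live replicas whose log has caught up with the leader's.
  inSyncReplicas(): number[] {
    const leaderLength = this.logs.get(this.leaderId)!.length;
    return Array.from(this.logs.keys()).filter(
      (id) => this.alive.has(id) && this.logs.get(id)!.length === leaderLength,
    );
  }

  // The leader's broker dies; promote an in-sync follower (never a lagging one).
  failLeader(): number {
    const candidates = this.inSyncReplicas().filter((id) => id !== this.leaderId);
    this.alive.delete(this.leaderId);
    if (candidates.length === 0) throw new Error("no in-sync follower: partition offline");
    this.leaderId = candidates[0];
    return this.leaderId;
  }
}

const trip = new ReplicatedPartition([1, 2, 3]);
trip.write("trip-started");
trip.replicate(2);
trip.replicate(3);
trip.write("trip-completed");
trip.replicate(2); // broker 3 has not caught up yet
console.log(trip.inSyncReplicas()); // [ 1, 2 ]
console.log(trip.failLeader());     // 2
```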

Step 7: Consuming messages in Kafka

Kafka consumers pull (poll) new messages from the brokers, which lets them control the pace of consumption. To handle high message volumes, consumers can be organized into consumer groups, where each partition is read by only one consumer in the group.

For example, if multiple replicas of a service need to process driver location updates from a driver-location-updates topic, all replicas can join the same consumer group so that each instance reads from its own subset of partitions.

Figure 11 shows sample consumer code using kafkajs.

Kafka messaging strategies: acks=0, acks=1, acks=all

In Kafka, message delivery mechanisms are controlled by the acks parameter in the producer configuration. This parameter sets the number of acknowledgments required from the Kafka cluster before a message is considered successfully delivered. The value of acks can be 0, 1, or all. Let’s break down each one of these:

acks=0: Unacknowledged message strategy in Kafka

The producer sends the message without waiting for any acknowledgment from the broker.

Pro: Since the producer doesn’t wait for a response, message delivery is fast.

Con: There’s no message delivery guarantee since there is no confirmation the message reached the broker.

Best for non-critical data like logging or metrics where losing some messages is acceptable.

acks=1: Single acknowledgment messaging strategy in Kafka

The producer waits for an acknowledgment from the leader broker once it has written the message to its local log.

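Pro: Since the leader broker acknowledges message receipt, this setting reduces the chance of data loss compared to acks=0.

Con: Waiting for an acknowledgment from the leader introduces a slight delay. A message can also still be lost if the leader fails after acknowledging it but before any follower has copied it.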

acks=all: Ensuring full replication in Kafka messaging

The producer waits for acknowledgments from all in-sync replicas (ISRs), ensuring that the message is fully replicated across the cluster.

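Pro: Provides the strongest durability guarantee, since an acknowledged message has been written to every in-sync replica. This is typically paired with the topic setting min.insync.replicas=2 or higher; otherwise, if the in-sync set shrinks to just the leader, acks=all protects no better than acks=1.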

Con: Waiting for all in-sync replicas adds latency, since the write is acknowledged only after the slowest in-sync follower has copied it.

Necessary for strict use cases such as financial transactions.
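To compare the three settings side by side, here is a toy model of one failure scenario: the leader appends a record and then crashes before any follower copies it. It reports whether the producer was told the write succeeded even though the record was lost. The types and functions are hypothetical and not a client API; in real clients the setting is `acks` (`0`, `1`, or `all` in the Java client, while kafkajs’s `producer.send()` takes `acks` with `-1` meaning all in-sync replicas).

```typescript
type AcksSetting = "0" | "1" | "all";

interface CrashScenario {
  leaderWrote: boolean;        // did the leader append the record before crashing?
  inSyncFollowers: number;     // followers in the in-sync replica set at write time
  followersThatCopied: number; // how many of them copied the record before the crash
}

// Would the producer have treated the send as successful?
function producerSeesSuccess(acks: AcksSetting, s: CrashScenario): boolean {
  switch (acks) {
    case "0":
      return true; // nothing is awaited, so every send looks successful
    case "1":
      return s.leaderWrote; // the leader alone acknowledges
    case "all":
      return s.leaderWrote && s.followersThatCopied >= s.inSyncFollowers;
  }
}

// Simplification: the record survives the leader crash only if every in-sync
// follower had copied it, so whichever follower is promoted still holds it.
function recordSurvives(s: CrashScenario): boolean {
  return s.leaderWrote && s.inSyncFollowers > 0 && s.followersThatCopied >= s.inSyncFollowers;
}

function silentLoss(acks: AcksSetting, s: CrashScenario): boolean {
  return producerSeesSuccess(acks, s) && !recordSurvives(s);
}

const crashBeforeReplication: CrashScenario = { leaderWrote: true, inSyncFollowers: 2, followersThatCopied: 0 };
const settings: AcksSetting[] = ["0", "1", "all"];
for (const acks of settings) {
  console.log(`acks=${acks}: success reported but record lost = ${silentLoss(acks, crashBeforeReplication)}`);
}
// acks=0: success reported but record lost = true
// acks=1: success reported but record lost = true
// acks=all: success reported but record lost = false (no ack was sent, so the producer can retry)
```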

Practical use cases of Kafka

Using Kafka for Logging, Monitoring & Alerting

Log and metrics collectors such as the OpenTelemetry Collector push logs and metrics from application services through Kafka to Elasticsearch (for indexing). Kibana helps visualize logs, while Apache Flink helps aggregate metrics in real time.

Kafka for data streaming in recommendations

Kafka processes real-time user clickstreams to enhance recommendation systems. User activity data is ingested into Kafka, which routes it to Flink for processing, enabling real-time aggregations and calculations. The processed data is sent to a Data Lake from where it’s fed to machine learning models to generate recommendations based on user interactions.

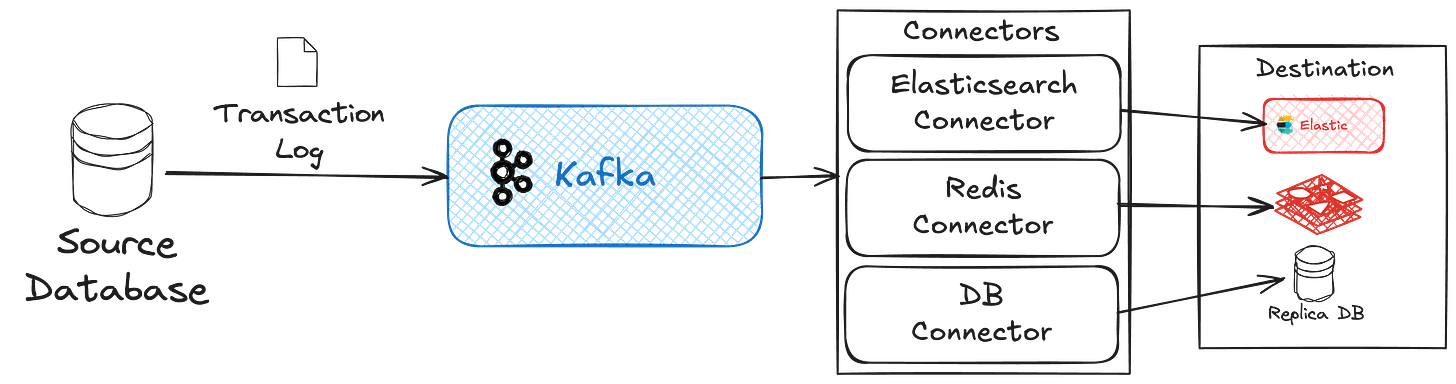
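As a rough illustration of the “real-time aggregations” step, the sketch below counts clicks per item in fixed one-minute (tumbling) windows, the kind of computation a stream processor like Flink runs continuously on events read from Kafka. It processes an in-memory array instead of a Kafka topic, and the event shape and window size are illustrative assumptions; it is not Flink’s API.

```typescript
interface ItemClick {
  userId: string;
  itemId: string;
  timestampMs: number; // event time in epoch milliseconds
}

// Count clicks per (window, item). Each event falls into exactly one window:
// [windowStart, windowStart + windowMs).
function countClicksPerWindow(events: ItemClick[], windowMs: number): Map<string, number> {
  const counts = new Map<string, number>();
  for (const e of events) {
    const windowStart = Math.floor(e.timestampMs / windowMs) * windowMs;
    const bucket = `${windowStart}|${e.itemId}`;
    counts.set(bucket, (counts.get(bucket) ?? 0) + 1);
  }
  return counts;
}

const clicks: ItemClick[] = [
  { userId: "u1", itemId: "pizza", timestampMs: 5_000 },
  { userId: "u2", itemId: "pizza", timestampMs: 42_000 },
  { userId: "u1", itemId: "sushi", timestampMs: 59_000 },
  { userId: "u3", itemId: "pizza", timestampMs: 61_000 },
];

const perMinute = countClicksPerWindow(clicks, 60_000);
console.log(perMinute.get("0|pizza"));     // 2
console.log(perMinute.get("60000|pizza")); // 1
```

A real stream processor also has to handle late and out-of-order events (Flink uses watermarks for this), which the sketch ignores.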

Change Data Capture (CDC) with Kafka: Keeping databases in sync

Kafka can be used to keep source and destination data stores in sync in real time. In a CDC setup, a source connector reads changes from the source database’s transaction log and publishes them to Kafka as change events. Sink connectors then deliver those events to destinations such as Elasticsearch, Redis, and replica databases.

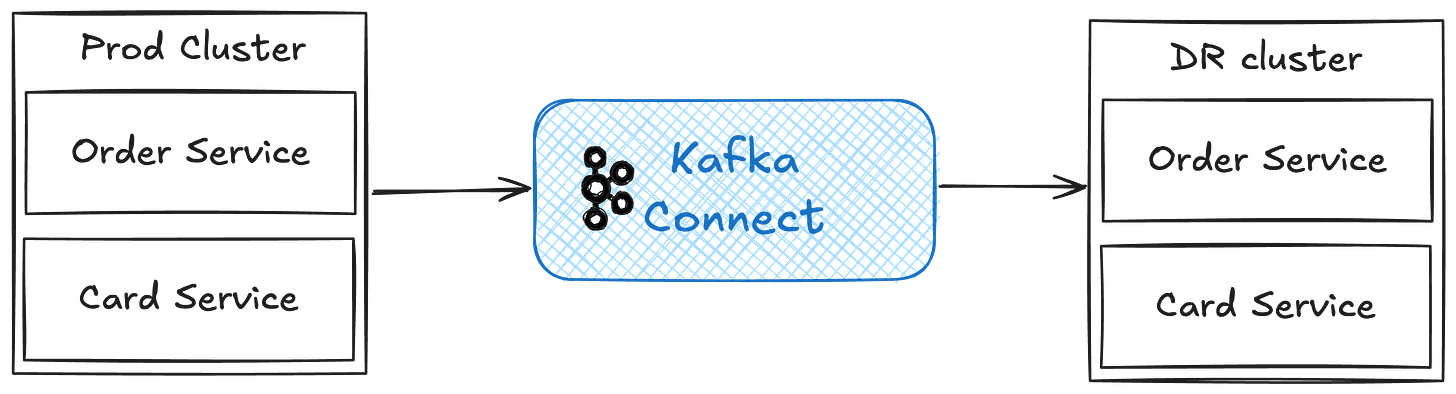
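A sketch of what a sink does with those change events, assuming a simplified event shape loosely modeled on Debezium’s envelope (`op` of `"c"` for create, `"u"` for update, `"d"` for delete, with `before` and `after` row images). The store, row shape, and function names are hypothetical. Because change events for the same row are typically keyed by its primary key, they land in the same partition and can be applied in order, which is what keeps the replica consistent.

```typescript
type Row = { id: string; [column: string]: string };

interface ChangeEvent {
  op: "c" | "u" | "d";
  before: Row | null; // row image before the change (null for creates)
  after: Row | null;  // row image after the change (null for deletes)
}

// Apply one change event to a replica store (e.g., a cache or search index).
function applyChange(replica: Map<string, Row>, event: ChangeEvent): void {
  switch (event.op) {
    case "c":
    case "u":
      if (event.after) replica.set(event.after.id, event.after); // upsert the latest row
      break;
    case "d":
      if (event.before) replica.delete(event.before.id);
      break;
  }
}

const replicaStore = new Map<string, Row>();
const tripChanges: ChangeEvent[] = [
  { op: "c", before: null, after: { id: "trip-1", status: "started" } },
  { op: "u", before: { id: "trip-1", status: "started" }, after: { id: "trip-1", status: "completed" } },
  { op: "c", before: null, after: { id: "trip-2", status: "started" } },
  { op: "d", before: { id: "trip-2", status: "started" }, after: null },
];

// Events are applied in the order they were read from the partition.
for (const change of tripChanges) applyChange(replicaStore, change);
console.log(replicaStore.get("trip-1")?.status); // completed
console.log(replicaStore.has("trip-2"));          // false
```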

Kafka for system migration

Disaster recovery is essential for large-scale applications where high availability is critical. Kafka supports this by replicating production data across data centers, for example with MirrorMaker 2, which runs on Kafka Connect. With Kafka Connect, large volumes of events can be ingested easily, making it a good fit for replicating production data.

Conclusion

This post was more about learning the basics of Kafka along with its most common use cases. In the next post, I will try to cover as many scaling challenges as possible based on my practical experiences with Kafka in a large-scale fintech system. Stay tuned 🤘🏻.

That’s all for today. Thanks for reading!


This one is amazing Aniket Singh. Made lots of notes. Waiting for part 2.