A crash course on caching: Distributed Caching & Strategies (Part 2/3)

A Deep Dive into Distributed Caching: Sharding Techniques, Cache Eviction, and Popular Caching Solutions

In What is Caching & How it Works, we covered the basics of caching, how it operates at the hardware level (CPU and OS), and its implementation across various infrastructure components.

Today, we will focus on Distributed Caching, exploring how large-scale systems manage caching effectively. The key topics we'll cover include:

What is Distributed Caching and its advantages?

How does distributed caching work?

What is Consistent Hashing?

Types of caching strategies

Types of cache eviction strategies

Popular examples of distributed caches

If you are a recent subscriber, here’s what you might have missed:

Before we dive deep, here's a distributed caching cheat sheet 🧷.

What is distributed caching?

A naive approach to caching data is to store it in a single-node system, but this method faces several challenges:

Scalability: How to handle the vast volume of data that needs to be cached?

Fault Tolerance: How can we ensure high availability of cached data if one node fails?

Load Balancing: How to handle a high volume of concurrent read requests, such as a Twitter feed accessed by millions of users?

Distributed caching addresses these challenges as systems scale to accommodate millions of users and large datasets.

In a distributed caching system, multiple nodes store replicas of each dataset. This architecture enhances fault tolerance and ensures high availability while allowing load balancing of requests across nodes, enabling the system to efficiently handle millions of reads.

How Distributed Caching works?

Figure 2 shows the components of a distributed cache system and how it works:

Cache Client - When caching data, the client library utilizes a hashing or sharding algorithm to determine the appropriate node or partition for data storage.

Cache Cluster

A dedicated multi-node cluster operates with a master-slave configuration to manage all read and write operations.

Data is replicated across multiple nodes to enhance fault tolerance and ensure high availability.

All write operations are directed to the master node, which determines how to distribute the data to the slave nodes.

Coordination mechanisms, such as distributed locks or consensus protocols, keep cache nodes synchronized, especially during simultaneous data modifications by multiple nodes.

Sharding - Data is divided into shards, each stored on a different cache node. This approach helps distribute data evenly and enables horizontal scaling of the cache.

Caching Strategies - Policies for read and write operations must be established to determine how data is updated between the database and the cache or vice versa.

Eviction Policies - Caches implement eviction strategies, such as LRU (Least Recently Used), LFU (Least Frequently Used), or TTL (Time to Live), to remove outdated or less frequently accessed data and create space for new entries.

Types of sharding algorithms in distributed caching

Sharding algorithms are essential for distributing data across multiple nodes in a distributed caching system. They determine which node to read from or write to. There are 3 types of sharding algorithms:

Modulus Sharding

Modulus sharding involves assigning a key to a shard based on the hash value of the key modulo the total number of shards.

Pros & cons

✅ Uniform Distribution: The modulus function evenly distributes data across shards, avoiding hot spots since the hash output is random.

✅ Simplicity: Easy to implement, as it relies on a simple hash function to determine the shard based on a key.

❌ Scaling Difficulty: Modulus-based sharding complicates adding or removing shards because keys have to be redistributed, causing significant data migration.

❌ Poor Range Queries: Since the sharding is based on the hash of keys, executing range queries (eg: getting keys between 1000 and 2000) becomes inefficient and requires querying multiple shards.

Range-based Sharding

Range-based sharding assigns keys to specific shards based on predefined key ranges. With this approach, the system can divide the key space into specific ranges and then map each range to a particular shard.

Pros & cons

✅ Efficient Range Queries: Range-based sharding optimizes range queries by storing data in specific shards, reducing the need to access multiple shards.

✅ Logical Data Grouping: Related data is stored together, enhancing retrieval efficiency.

❌ Load Imbalance Risk: Uneven data distribution can overload certain shards if many values fall within a specific range.

Consistent Hashing in Distributed caching

Modulus and range-based sharding are easy to manage but struggle with scaling. When a node goes down or a new node is added (a common scenario in distributed systems), keys must be completely redistributed, leading to downtime and complexity.

Consistent hashing (Figure 5) solves this problem by mapping both keys and nodes onto a fixed-size ring using a hash function. This method minimizes key redistribution when nodes change, improving scalability and stability.

How does consistent hashing work?

When a key is requested, the system applies a hash function to map the key to a specific position on the ring.

The system traverses the ring in a clockwise direction from the key's position until it encounters the first node.

This node is responsible for storing the data associated with the key.

Handling failed nodes in Consistent Hashing

When a node in the ring goes down, all keys assigned to that node are reassigned to the next available node in the ring, while other keys remain unaffected.

Figure 6 explains this scenario. When node 4 fails, the key k6 which was previously assigned to node 4, is reassigned to the next node in the ring i.e. node 1.

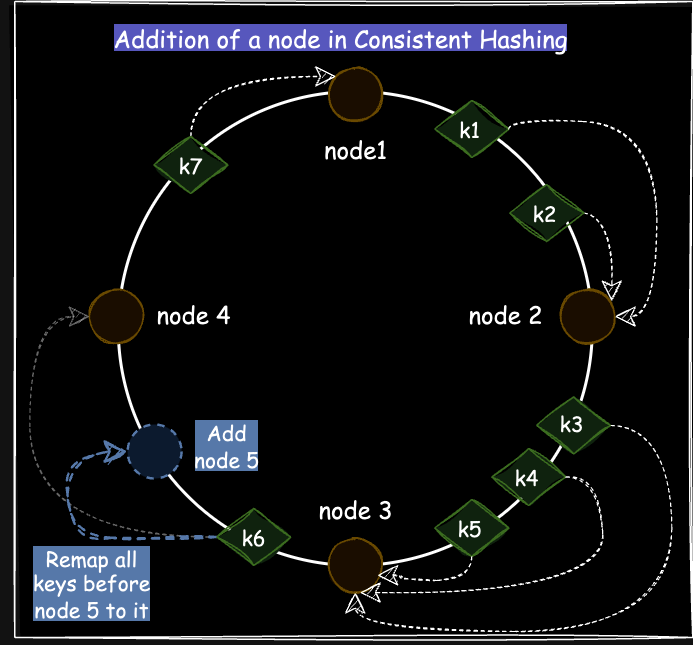

Handling new nodes in Consistent Hashing

When a new node is added to the ring, all keys that lie between this new node and the previous node are remapped to the new node, while keys assigned to other nodes remain unaffected.

Figure 7 explains this scenario. When a new node (node 5), is added to the cluster, the key k6, previously assigned to node 4, is now reassigned to the new node 5.

Types of Caching Strategies for Distributed Systems

There are five types of caching strategies, categorized into two subtypes based on their focus: read strategies and write strategies.

Read strategies: Cache Aside, Read Through

Write strategies: Write Around, Write Back, Write Through

In the following sections, we will explore each strategy in detail and discuss their pros & cons.

Cache Aside

The application requests data for a key from the cache.

If the key is present in the cache (cache hit), it is returned to the application.

If key is not present in the cache (cache miss), the application:

Fetches data from the main DB.

Updates the cache with latest data for future reads.

Pros & Cons of cache aside

✅ The system can tolerate cache failures, as it can still read from the storage.

❌ The application must manage both cache and storage, complicating the code.

Read Through cache

The application requests data for a key from the cache.

If the key is present in the cache (cache hit), it is returned to the application.

If key is not present in the cache (cache miss), the cache:

Fetches data from the main DB.

Updates itself with the latest data and returns it to the application.

Pros & Cons of read-through cache

✅ The implementation is simple, as the application only needs to manage cache (not storage).

❌ The system cannot tolerate cache failures as it doesn’t rely on DB.

❌ The cache populates only after the first request, leading to slower initial access for new data— an issue for high-throughput systems that need pre-warmed caches.

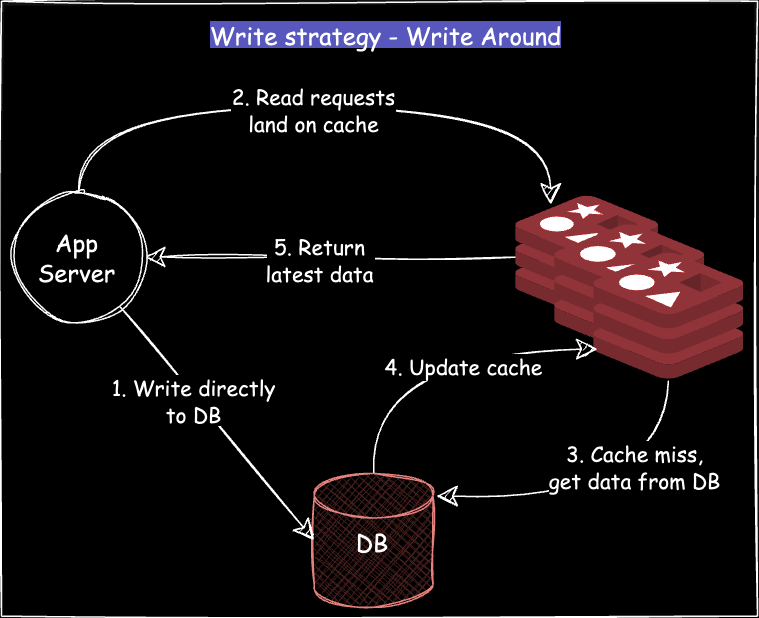

Write Around cache

All the write requests go directly to the DB and read requests land on the cache.

If the key is present in the cache (cache hit), it is returned to the application.

If key is not present in the cache (cache miss), the cache:

Fetches data from the main DB

Updates itself with the latest data and returns it to the application

Pros & Cons of write around cache

✅ Cache contains the data that it really needs (frequently read).

❌ Frequent cache misses for recently written data can cause inconsistent read performance, as data may need to be fetched from the database.

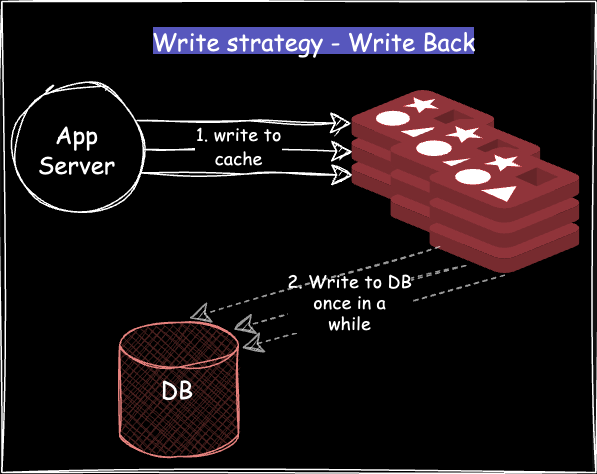

Write Back cache

All the data is written directly to the cache.

The actual write to the DB happens later, asynchronously, or when the cache data is evicted.

Pros & Cons of write-back cache

✅ Writes are faster since they are only performed in the cache, deferring the actual database write.

✅ Multiple writes to the same data can be combined into a single database operation, reducing the number of I/O operations.

❌ The cache holds the most recent version of the data, so if other services access the database directly, they might see stale or outdated data.

Write Through cache

All write requests from the application land on the cache.

The actual write to the DB also happens in sync before returning response to the application.

Pros & Cons of write-through cache

✅ Since writes are performed in both the cache and the database simultaneously, the cache is always in sync with the DB, ensuring data consistency.

✅ By writing data to the cache during writes, future reads benefit from cached data, enhancing read performance.

❌ Every write operation incurs the overhead of writing to both the cache and the database, which can slow down the write performance.

Cache eviction strategies in Distributed Caches

Cache eviction is crucial for maintaining fresh and relevant data. There are four main types of cache eviction policies:

Least Recently Used (LRU)

LRU evicts the cache entry that has not been used for the longest time. It assumes that data that hasn't been accessed in a while is less likely to be needed soon.

Pros & Cons

✅ Adapts well to scenarios with temporal locality (data accessed recently is likely to be accessed again soon).

❌ Requires tracking the usage order of all items, which can be computationally expensive for large caches.

Example: Web browsers often use LRU to manage their cache of visited web pages. The most recently visited pages are kept in the cache, while the least recently accessed pages are evicted to make space for new ones.

Least Frequently Used (LFU)

LFU evicts the items that are accessed the least number of times. It tracks the frequency of access to each cached item and removes those that have been used the least over time.

Pros & Cons

✅ Works well in scenarios where frequently accessed data is important (product details on an e-commerce website).

❌ Harder to implement as it requires tracking access counts.

Example: Search engines use LFU in their query caching mechanisms to keep the most frequently searched queries cached, allowing fast retrieval for popular searches.

First In First Out (FIFO)

FIFO evicts the oldest item in the cache, based on when it was added, regardless of how often it has been accessed. The first item added is the first item removed.

Pros & Cons

✅ Simple to implement since it doesn't require tracking access times or counts.

❌ Doesn’t account for how often or how recently an item is used, which can lead to suboptimal cache efficiency.

Example: Message brokers like RabbitMQ may use FIFO in their in-memory message cache, where older messages are evicted to ensure space for newer ones without considering usage patterns.

Time To Live (TTL)

TTL evicts items automatically from the cache after a predefined time period, regardless of how frequently or recently the item was accessed. It’s a time-based expiration method.

Pros & Cons

✅ Useful when data is time-sensitive, such as temporary data or cached results that may become invalid after a certain period.

❌ Items may still be relevant when evicted if they haven’t been accessed frequently during the TTL window.

Example: User session tokens can be cached with a predefined TTL (eg: 5 mins). Once the TTL expires, the token is evicted from the cache, and a new session token must be generated.

Popular distributed caching solutions

Redis and Memcached are two most popular open-sources distributed caches. A quick comparison matrix between them:

Additionally, Amazon ElastiCache is a fully managed in-memory caching service from AWS that supports both Redis and Memcached, giving developers flexibility in choosing the right engine for their use case.

It offers multi-AZ (Availability Zone) deployments with automatic failover, ensuring high availability even during an AZ outage.

ElastiCache can also auto-scale the number of nodes in the cluster based on traffic demand, enabling seamless handling of fluctuating workloads without manual scaling efforts.

That’s all for today. Thanks for reading 🙏🏻!

In the next issue, we will explore key challenges with caches & how to solve them, how to implement caching (with code snippets), & key gotchas about how NOT to do caching.

Creating each article, along with the accompanying diagrams, takes about 12-14 hours of research and hard work. If you enjoy my content, please ❤️ and subscribe to encourage me to keep creating more such valuable content! 🙏🏻.

Insightful, well explained 👏. Really enjoys reading your post keep sharing quality contents.