EP 9 | What are Load Balancers ⚖️?

Hi, Aniket here 👋🏻 and welcome to my System Design Newsletter 😊!

Over the years, load balancers have become an essential pillar of system architecture, serving as the front-line controllers that intelligently distribute incoming network traffic across multiple servers.

Similar to an air traffic controller coordinating the safe landing of planes by assigning them to available runways, load balancers regulate data flow within a system, ensuring horizontal scalability and optimal resource utilization.

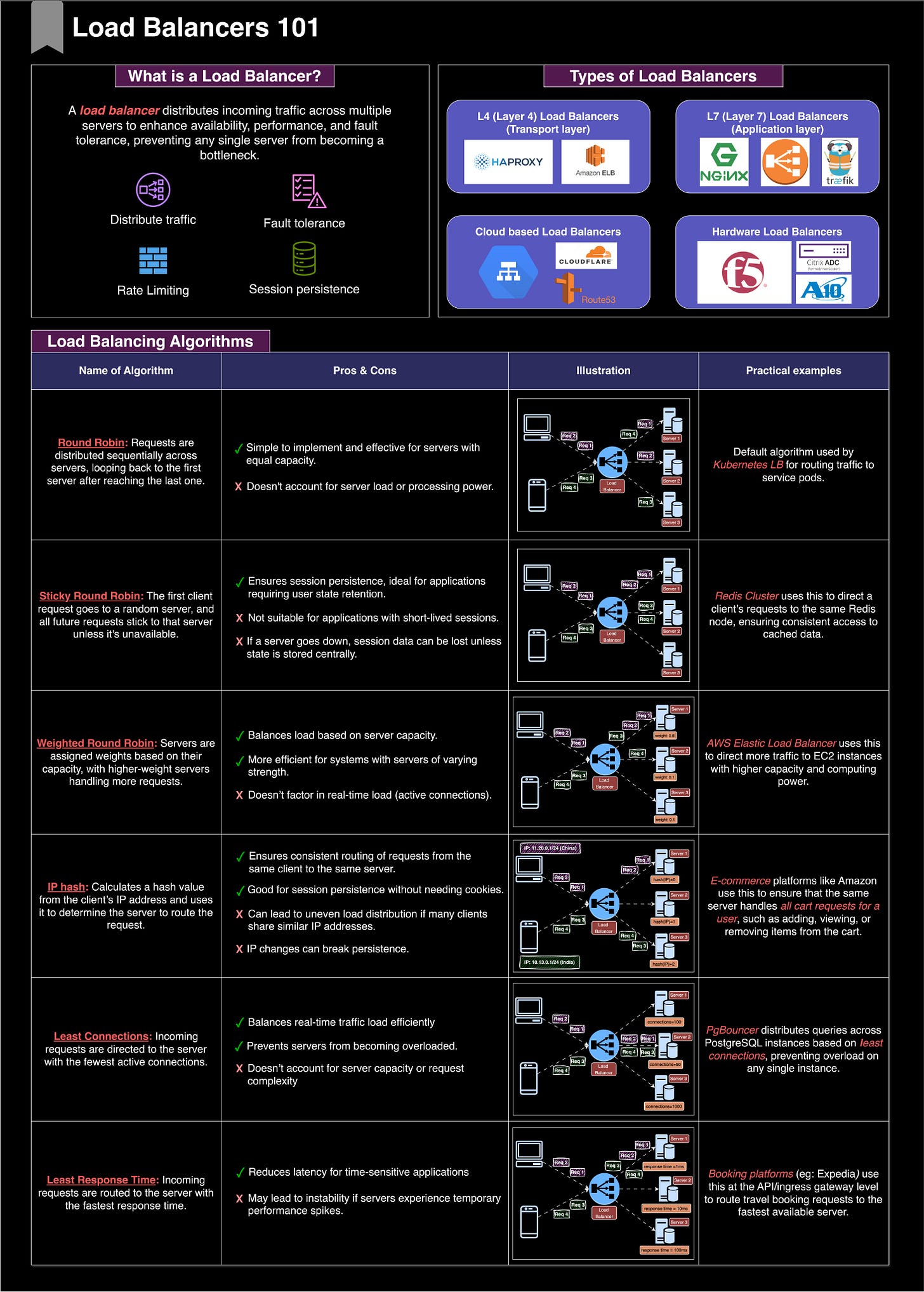

Before we dive deeper, here's a quick cheat sheet summarizing today’s article 🧷

Let’s understand each component in detail:

Functions of a load balancer

Distribute Traffic: Load balancers spread incoming requests across multiple servers so that no single server becomes overloaded.

Fault Tolerance: They detect server failures and redirect traffic to healthy servers, ensuring continuous service availability.

Rate Limiting: Load balancers can control traffic flow by limiting the number of requests sent to servers, preventing overload.

Session Persistence: They maintain session data by consistently routing a user’s requests to the same server for the duration of a session.
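The article doesn't say how rate limiting is implemented. One common technique is a token bucket, and here is a minimal sketch under that assumption. The TokenBucket class and its rate and capacity parameters are hypothetical, and the clock is passed in explicitly so the behaviour is easy to follow:

```python
from dataclasses import dataclass


@dataclass
class TokenBucket:
    """Token-bucket limiter: each request spends one token; tokens refill at a fixed rate."""
    rate: float      # tokens added per second (hypothetical parameter)
    capacity: float  # maximum burst size (hypothetical parameter)
    tokens: float = 0.0
    last: float = 0.0

    def __post_init__(self) -> None:
        # Start with a full bucket so an initial burst up to `capacity` is allowed.
        self.tokens = self.capacity

    def allow(self, now: float) -> bool:
        # Refill based on elapsed time, never exceeding capacity.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True  # forward the request to a backend server
        return False     # reject the request (e.g., respond with HTTP 429)


bucket = TokenBucket(rate=2.0, capacity=3.0)
print([bucket.allow(now=0.0) for _ in range(4)])  # [True, True, True, False]
print(bucket.allow(now=0.5))  # True: 0.5 s at 2 tokens/s refilled one token
```

The capacity controls how large a burst can pass at once, while the rate caps sustained throughput.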

Types of Load Balancers

Layer 4: Operates at the transport layer, distributing traffic based on IP addresses and TCP/UDP ports. Eg: HAProxy (TCP mode), AWS Network Load Balancer (NLB)

Layer 7: Works at the application layer, routing traffic based on content such as HTTP headers and URLs. Eg: Nginx, Traefik, AWS ALB

Cloud-Based: Load balancers hosted in the cloud, offering scalable, on-demand traffic management without hardware overhead. Eg: Cloudflare, AWS Route 53 (DNS-based load balancing)

Hardware: Physical load balancers deployed on-premises, providing high-performance traffic distribution with dedicated infrastructure. Eg: F5, A10, Citrix ADC
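A minimal sketch contrasting Layer 4 and Layer 7 routing, assuming hypothetical pool names, ports, and paths. A Layer 4 balancer can only use the connection's addresses and ports, while a Layer 7 balancer can parse the HTTP request and route on its URL path. In both cases, choosing a specific server inside the selected pool is left to one of the algorithms described below:

```python
from typing import NamedTuple


class Connection(NamedTuple):
    """What a Layer 4 balancer can see: addresses and ports only."""
    client_ip: str
    client_port: int
    dest_port: int


def route_l4(conn: Connection, pools: dict[int, list[str]]) -> list[str]:
    # Layer 4: choose a backend pool by destination port; the payload stays opaque.
    return pools[conn.dest_port]


def route_l7(path: str, routes: dict[str, list[str]], default: list[str]) -> list[str]:
    # Layer 7: inspect the HTTP request; here, pick the longest matching URL path prefix.
    matches = [prefix for prefix in routes if path.startswith(prefix)]
    return routes[max(matches, key=len)] if matches else default


l4_pools = {443: ["web-1", "web-2"], 5432: ["db-1"]}
l7_routes = {"/api": ["api-1", "api-2"], "/static": ["cdn-1"]}
print(route_l4(Connection("203.0.113.7", 51514, 5432), l4_pools))  # ['db-1']
print(route_l7("/api/orders", l7_routes, default=["web-1"]))       # ['api-1', 'api-2']
```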

Types of load balancing algorithms

1. Round Robin

How does it work?

Requests are distributed sequentially across servers, looping back to the first server after reaching the last one.

In Figure 2, requests 1, 2, 3 and 4 go to servers 1, 2, 3 and 1 respectively.
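A minimal round-robin sketch in Python (the RoundRobin class is hypothetical), reproducing the Figure 2 sequence with three servers:

```python
from itertools import cycle


class RoundRobin:
    """Hand out servers in a fixed order, wrapping around after the last one."""

    def __init__(self, servers: list[str]) -> None:
        self._next = cycle(servers)

    def pick(self) -> str:
        return next(self._next)


lb = RoundRobin(["server1", "server2", "server3"])
print([lb.pick() for _ in range(4)])  # ['server1', 'server2', 'server3', 'server1']
```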

Pros & Cons

✅ Simple to implement and effective for servers with equal capacity.

❌ Doesn't account for server load or processing power.

Example: kube-proxy in IPVS mode uses round robin (rr) by default to route traffic for a Kubernetes Service to its pods.

2. Sticky Round Robin

How does it work?

A client's first request is assigned to a server in round-robin order, and all of that client's subsequent requests stick to the same server unless it becomes unavailable.

In Figure 3, requests 1 & 2 go to server 1, while requests 3 & 4 go to server 2.
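A minimal sketch of sticky round robin, assuming clients are identified by a hypothetical client ID (in practice often a cookie or session token) and that health status is supplied by a separate health checker. The usage mirrors Figure 3 under the assumption that requests 1 & 2 come from one client and requests 3 & 4 from another:

```python
from itertools import cycle


class StickyRoundRobin:
    """Assign a client's first request round-robin, then pin the client to that server."""

    def __init__(self, servers: list[str]) -> None:
        self.servers = servers
        self.healthy = set(servers)            # servers currently passing health checks
        self._rr = cycle(servers)
        self.assignments: dict[str, str] = {}  # client id -> pinned server

    def _next_healthy(self) -> str:
        # Advance the round-robin cursor, skipping unhealthy servers.
        for _ in range(len(self.servers)):
            server = next(self._rr)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers")

    def pick(self, client_id: str) -> str:
        server = self.assignments.get(client_id)
        if server is None or server not in self.healthy:
            # New client, or its pinned server went down: (re)assign round-robin.
            server = self._next_healthy()
            self.assignments[client_id] = server
        return server


lb = StickyRoundRobin(["server1", "server2"])
print([lb.pick(c) for c in ["alice", "alice", "bob", "bob"]])
# ['server1', 'server1', 'server2', 'server2']
```

If server 1 is later marked unhealthy, "alice" is reassigned to server 2; any session state kept only in server 1's memory is lost, which is the central-state concern in the cons.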

Pros & Cons

✅ Ensures session persistence, ideal for applications requiring user state retention.

❌ Not suitable for applications with short-lived sessions.

❌ If a server goes down, session data can be lost unless state is stored centrally.

Example: AWS Application Load Balancer (ALB) supports sticky sessions, using a cookie to keep routing a client’s requests to the same target.

3. Weighted Round Robin

How does it work?

Servers are assigned weights based on their capacity, with higher-weight servers handling more requests.

In Figure 4, out of 4 requests, 3 go to server 1 (0.8 * 4 = 3.2) and the remaining 1 goes to server 2 (0.1 * 4 = 0.4), since requests can't be split and the expected shares are rounded to whole requests.
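A minimal sketch using smooth weighted round robin, the variant implemented by Nginx's upstream module; the article doesn't say which variant it means. The integer weights 3 and 1 are hypothetical, chosen to reproduce the 3-to-1 split described for Figure 4:

```python
class SmoothWeightedRoundRobin:
    """Spread picks in proportion to weight, interleaving servers instead of
    sending a burst of consecutive requests to the heaviest one."""

    def __init__(self, weights: dict[str, int]) -> None:
        self.weights = weights
        self.current = {server: 0 for server in weights}
        self.total = sum(weights.values())

    def pick(self) -> str:
        # Every server gains its weight; the highest running score wins
        # and is then penalized by the total weight.
        for server, weight in self.weights.items():
            self.current[server] += weight
        chosen = max(self.current, key=self.current.get)  # ties go to the first server
        self.current[chosen] -= self.total
        return chosen


lb = SmoothWeightedRoundRobin({"server1": 3, "server2": 1})
print([lb.pick() for _ in range(4)])  # ['server1', 'server1', 'server2', 'server1']
```

With weights 5, 1 and 1 the same code yields a, a, b, a, c, a, a: the heavy server still gets 5 of 7 requests, but the lighter servers are interleaved.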

Pros & Cons

✅ Balances load based on server capacity.

✅ More efficient for systems with servers of varying strength.

❌ Doesn’t factor in real-time load (active connections).

Example: Nginx supports this through the weight parameter on the server entries of an upstream block, sending proportionally more requests to higher-capacity servers.

4. IP Hash

How does it work?

Computes a hash of the client’s IP address and uses it to determine which server receives the request.

In Figure 5, requests 1 & 2 come from the same IP in China, so they go to server 1, where hash(IP)=0, while requests 3 & 4 come from the same IP in India and go to server 3, where hash(IP)=2.
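A minimal sketch of IP hashing, assuming a hypothetical pick_by_ip helper that takes a SHA-256 digest of the address modulo the number of servers; real load balancers use their own hash functions:

```python
import hashlib


def pick_by_ip(client_ip: str, servers: list[str]) -> str:
    """Map a client IP to a server with a stable hash, modulo the pool size."""
    # Python's built-in hash() is randomized per process for strings, so use a
    # digest that gives the same answer on every node and after restarts.
    digest = hashlib.sha256(client_ip.encode()).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["server1", "server2", "server3"]
ip = "203.0.113.7"  # documentation-range address, purely illustrative
print(pick_by_ip(ip, servers))  # the same server on every call for this IP
```

Because the server is chosen as hash modulo pool size, adding or removing a server remaps most clients; consistent hashing is the usual remedy when the pool changes often.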

Pros & Cons

✅ Ensures consistent routing of requests from the same client to the same server.

✅ Good for session persistence without needing cookies.

❌ Can lead to uneven load distribution if many clients share the same IP address (e.g., behind a corporate NAT or proxy).

❌ IP changes can break persistence.

Example: Nginx offers this as its ip_hash directive. An e-commerce platform can use it so that the same server handles all of a user’s cart requests, such as adding, viewing, or removing items from the cart.

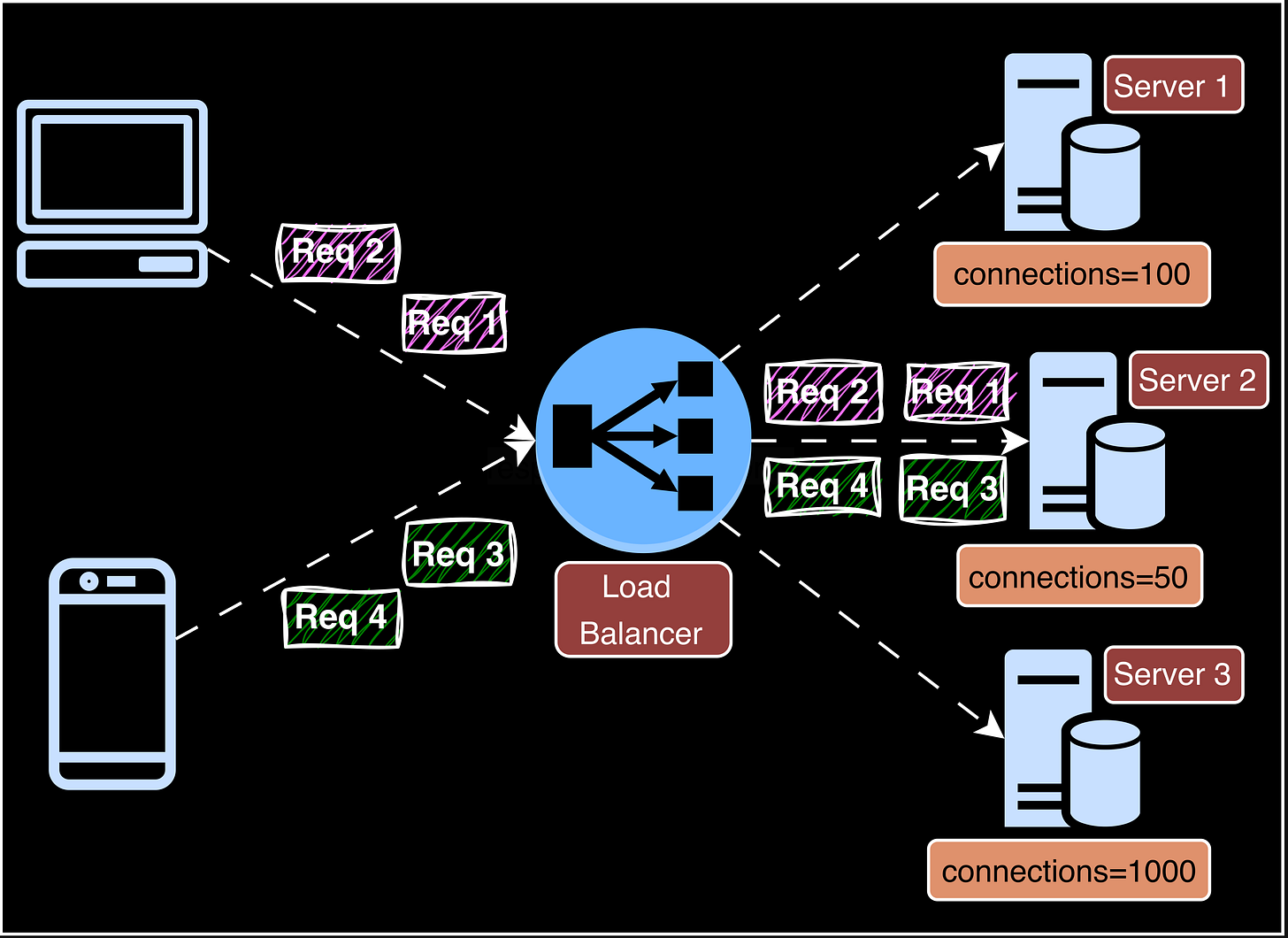

5. Least Connections

How does it work?

Incoming requests are directed to the server with the fewest active connections.

In Figure 6, all requests go to server 2 because it has the fewest active client connections (50).
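A minimal sketch of least connections, assuming the load balancer is notified when each connection opens and closes (the acquire and release methods are hypothetical). Only server 2's count of 50 comes from Figure 6; the other counts are made up for illustration:

```python
class LeastConnections:
    """Route each new connection to the server with the fewest active connections."""

    def __init__(self, servers: list[str]) -> None:
        self.active = {server: 0 for server in servers}

    def acquire(self) -> str:
        server = min(self.active, key=self.active.get)  # ties go to the first server listed
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        # Call when the connection closes so the count reflects live load.
        self.active[server] -= 1


lb = LeastConnections(["server1", "server2", "server3"])
lb.active.update({"server1": 120, "server2": 50, "server3": 80})  # hypothetical snapshot
print(lb.acquire())  # server2
```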

Pros & Cons

✅ Balances real-time traffic load efficiently.

✅ Prevents servers from becoming overloaded.

❌ Doesn’t account for server capacity or request complexity.

Example: HAProxy offers this as balance leastconn, which its documentation recommends for long-lived sessions such as SQL database connections, preventing overload on any single backend.

6. Least Response Time

How does it work?

Incoming requests are routed to the server with the fastest response time.

In Figure 7, all requests go to server 1 because it has the lowest current response time (1 ms).
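The article doesn't specify how response time is measured. This minimal sketch assumes the balancer records each request's latency and keeps an exponentially weighted moving average (EWMA) per server, with a hypothetical smoothing factor alpha. Only server 1's 1 ms comes from Figure 7; the other samples are illustrative:

```python
class LeastResponseTime:
    """Route to the server with the lowest smoothed response time."""

    def __init__(self, servers: list[str], alpha: float = 0.3) -> None:
        self.servers = servers
        self.alpha = alpha  # weight given to the newest sample (hypothetical value)
        self.latency_ms: dict[str, float] = {}

    def pick(self) -> str:
        # Servers without any samples are tried first so they get measured.
        for server in self.servers:
            if server not in self.latency_ms:
                return server
        return min(self.latency_ms, key=self.latency_ms.get)

    def record(self, server: str, sample_ms: float) -> None:
        if server not in self.latency_ms:
            self.latency_ms[server] = sample_ms  # first sample seeds the average
        else:
            # EWMA: a single slow response moves the average only partway.
            old = self.latency_ms[server]
            self.latency_ms[server] = self.alpha * sample_ms + (1 - self.alpha) * old


lb = LeastResponseTime(["server1", "server2", "server3"])
for server, ms in [("server1", 1.0), ("server2", 12.0), ("server3", 7.0)]:
    lb.record(server, ms)
print(lb.pick())  # server1
lb.record("server1", 40.0)  # average becomes about 0.3 * 40 + 0.7 * 1 = 12.7 ms
print(lb.pick())  # server3
```

A larger alpha reacts faster to real slowdowns but also to noise, which is the instability trade-off listed under the cons.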

Pros & Cons

✅ Reduces latency for time-sensitive applications.

❌ May lead to instability if servers experience temporary performance spikes.

Example: Latency-sensitive services such as travel booking platforms can use this at the API/ingress gateway level to route booking requests to the fastest available server; NGINX Plus, for instance, offers it as the least_time method.

So far so good. I will publish separate deep-dive diagrams on load balancers in upcoming posts!

Also, the next post will be about something exciting and a bit more challenging 😉! Stay tuned and don’t forget to subscribe to support my work 🙏🏻.